I feel it is time for another update on VMware Cloud Director (VCD) capabilities for establishing L2 VPN between an on-prem location and an Org VDC. The previous blog posts were written in 2015 and 2018 and do not reflect the changes that come with NSX-T as the underlying cloud network platform.

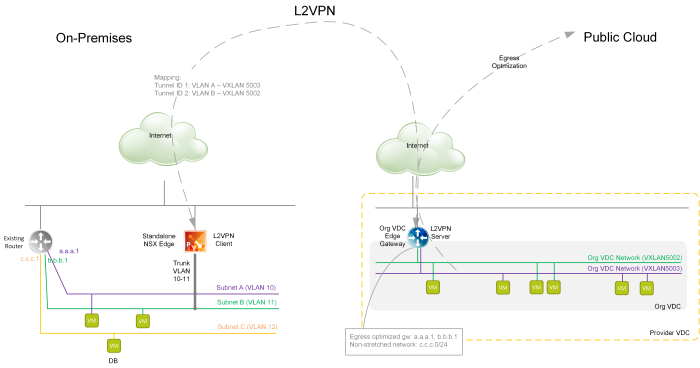

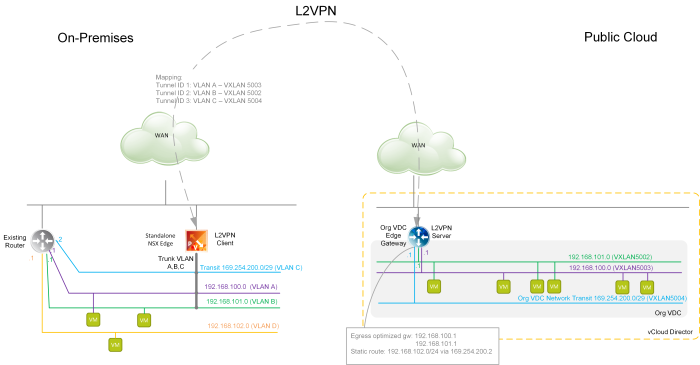

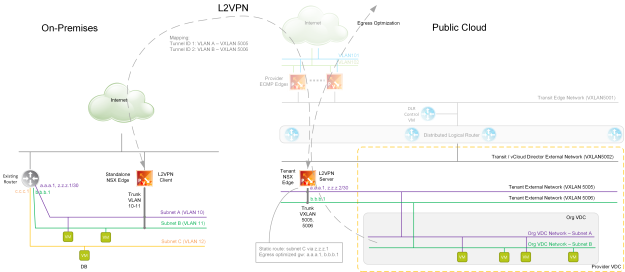

The primary use case for L2 VPN to the cloud is workload migration: the L2 VPN tunnel is established temporarily, until all VMs on a single network have been migrated. The secondary use case is disaster recovery, but I feel that running L2 VPN permanently is not the right approach.

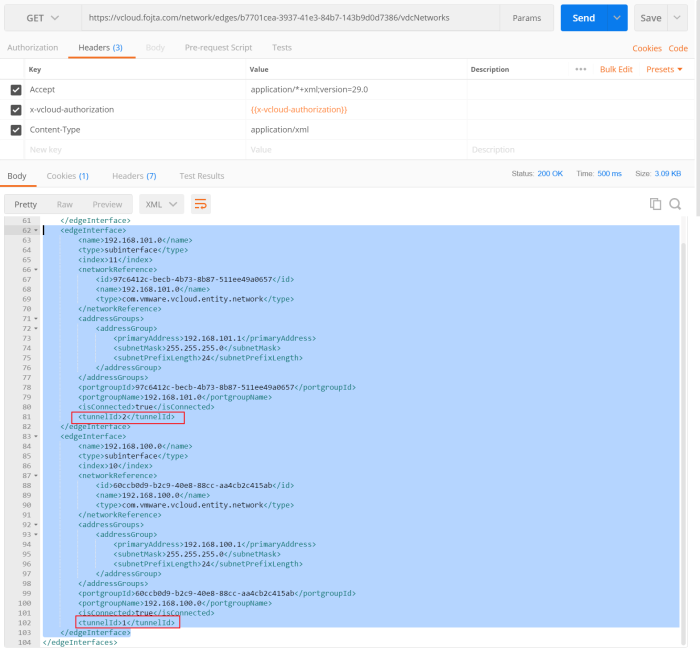

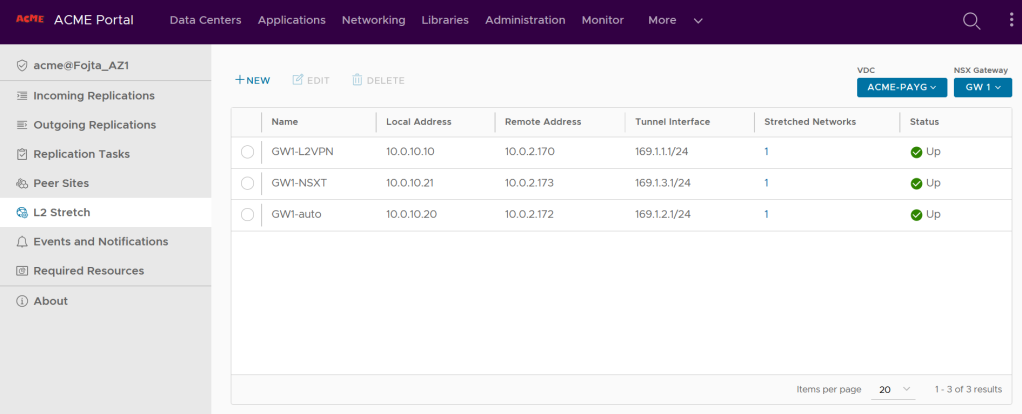

But that is not the topic of today’s post. VCD has supported setting up L2 VPN on the tenant’s Org VDC Gateway (Tier-1 GW) since version 10.2; however, it is still a hidden, API-only feature (the GUI is finally coming soon … in VCD 10.3.1). The actual setup is not trivial, as the underlying NSX-T technology requires an IPSec VPN tunnel to be established first to secure the communication between the L2 VPN client and server. VMware Cloud Director Availability (VCDA) version 4.2 is an add-on disaster recovery and migration solution for tenant workloads on top of VCD, and it simplifies the setup of both the server (cloud) and client (on-prem) L2 VPN endpoints from its own UI. To reiterate, VCDA is not needed to set up L2 VPN, but it makes it much easier.

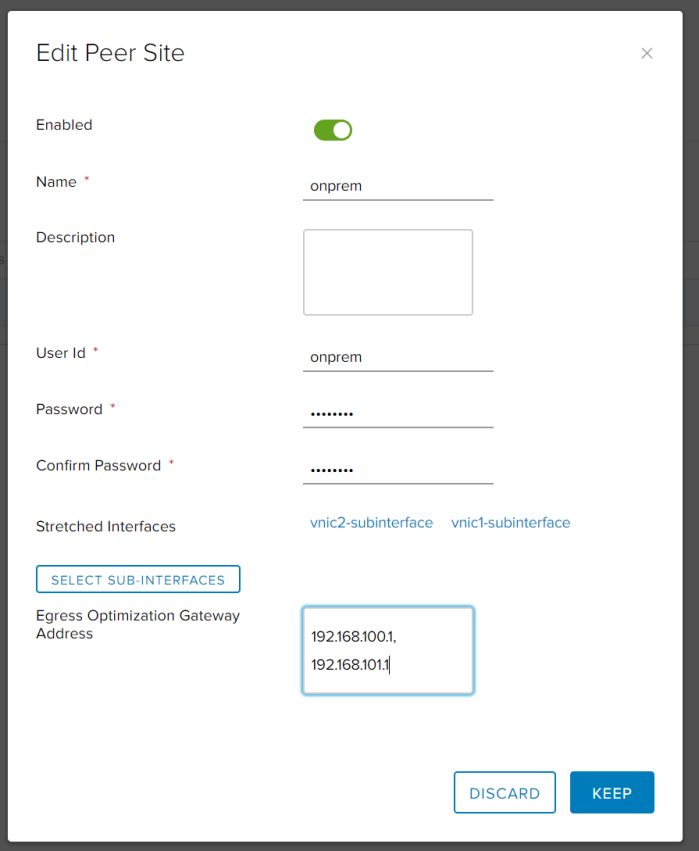

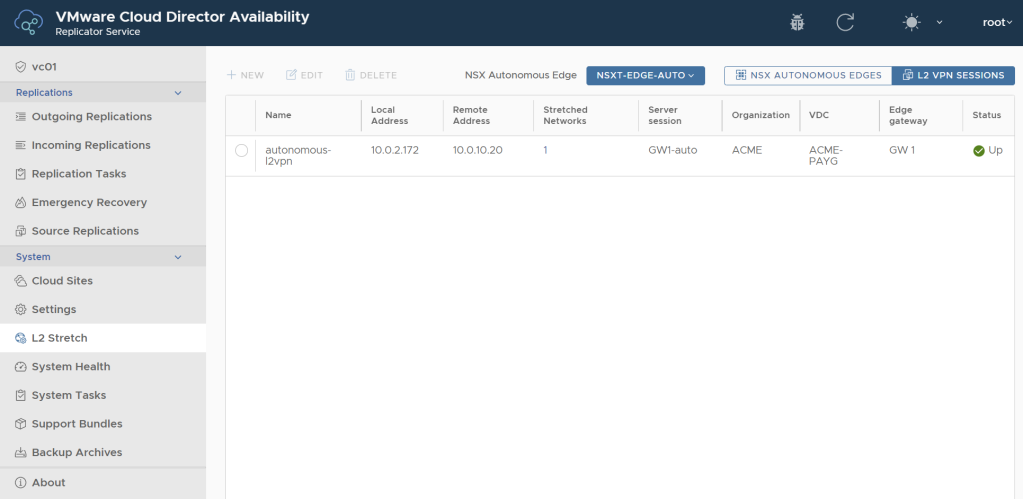

The screenshot above shows the VCDA UI plugin embedded in the VCD portal. You can see that three L2 VPN sessions have been created on VDC Gateway GW1 (NSX-T Tier-1 backed) in the ACME-PAYG Org VDC. Each session uses a different L2 VPN client endpoint type.

The on-prem client can be an existing NSX-T Tier-0 or Tier-1 GW, an NSX-T autonomous edge, or a standalone Edge client. Each requires a different type of configuration, so let me discuss each separately.

NSX-T Tier-0 or Tier-1 Gateway

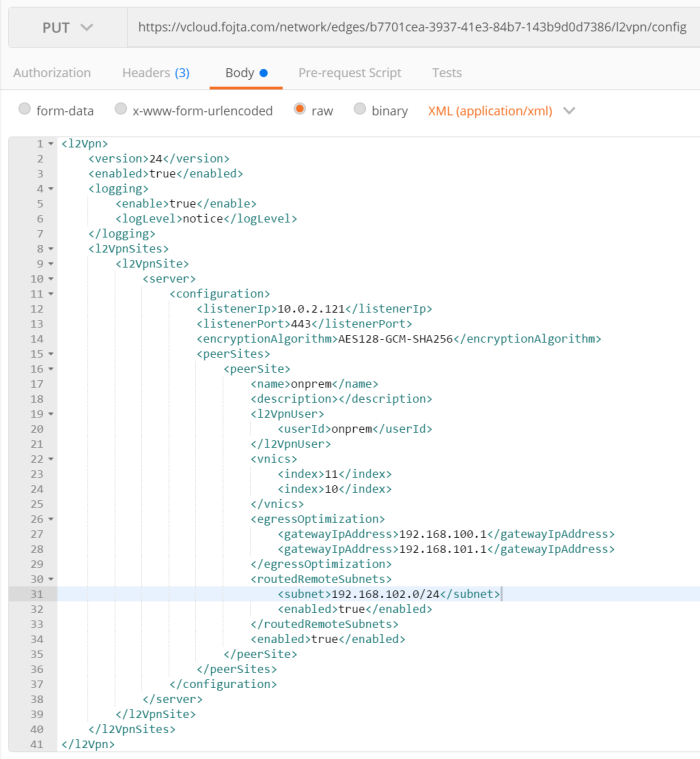

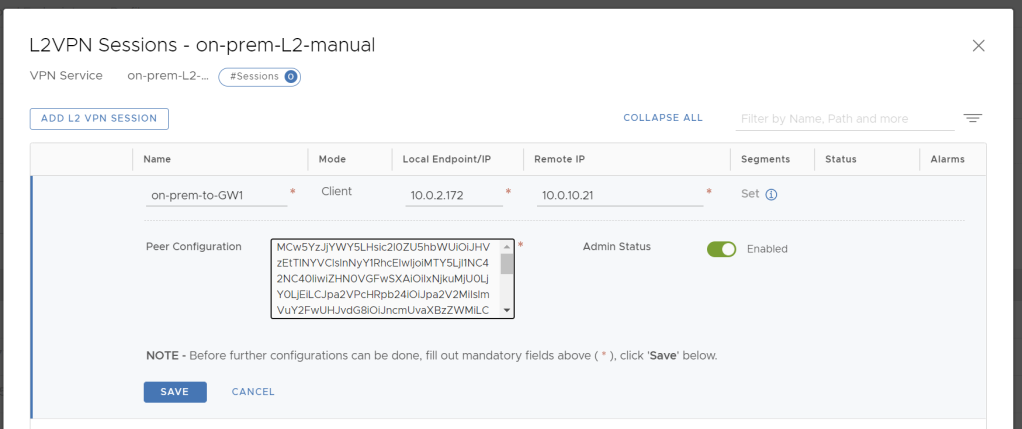

This is mostly suitable for tenants who already run an NSX-T environment on-prem. They need to set up both the IPSec and L2 VPN tunnels directly in NSX-T Manager, which makes it the most complicated of the three options. On either the Tier-0 or Tier-1 GW they first need to set up the IPSec VPN and L2 VPN client services. Then the L2 VPN session must be created with the local and remote endpoint IPs and the Peer Code, which must be retrieved beforehand via the VCD API (it is not available in the VCDA UI, but will be available in the VCD UI in 10.3.1 or newer). The peer code contains all the necessary configuration for the parent IPSec session in Base64 encoding.
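Since the peer code is just Base64, you can peek inside it before pasting it into the client configuration. The snippet below is a minimal sketch of that idea in Python. The function name `decode_peer_code` and the sample payload are my own placeholders, not anything VCD or NSX-T returns, and I am assuming the decoded content is readable text; the real peer code layout is not shown here.

```python
import base64
import binascii


def decode_peer_code(peer_code: str) -> str:
    """Return the text carried inside a Base64-encoded peer code."""
    # Remove whitespace or line breaks picked up while copying the value.
    cleaned = "".join(peer_code.split())
    try:
        raw = base64.b64decode(cleaned, validate=True)
    except binascii.Error as exc:
        raise ValueError("peer code is not valid Base64") from exc
    # Assumption: the payload is text; undecodable bytes are replaced.
    return raw.decode("utf-8", errors="replace")


# Hypothetical payload for illustration only, NOT the real peer code format.
sample_payload = "example-ipsec-settings"
sample_peer_code = base64.b64encode(sample_payload.encode("utf-8")).decode("ascii")

print(decode_peer_code(sample_peer_code))
```

Decoding is only useful for a sanity check, for example to confirm that you copied the whole string. The client endpoints expect the peer code in its original encoded form.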

Standalone Edge Client

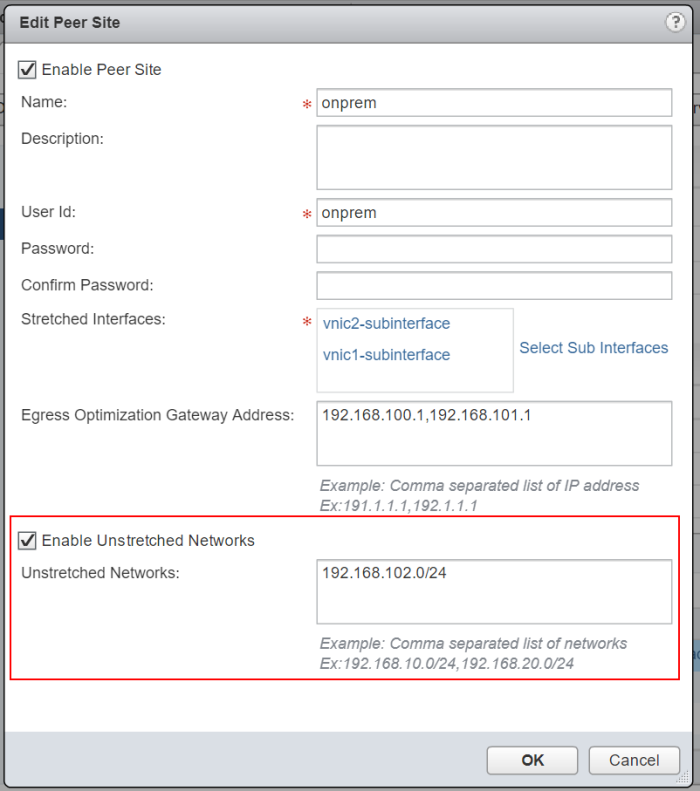

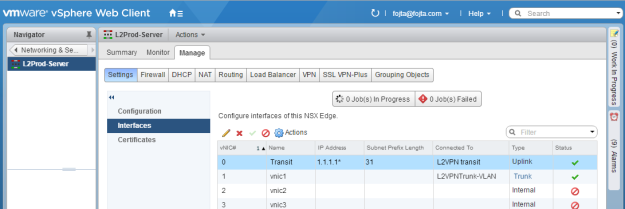

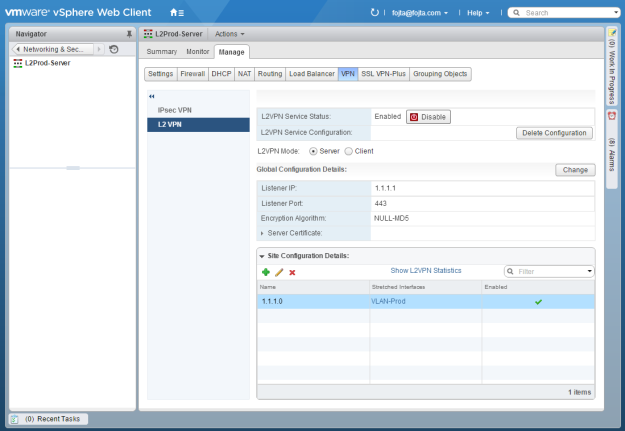

This option leverages a very light (150 MB) OVA appliance that can be downloaded from the NSX-T download website and actually works with both NSX-V and NSX-T L2 VPN server endpoints. It does not require any NSX installation. It provides no UI, and its configuration must be done at deployment time via OVF parameters. Again, the peer code must be provided.

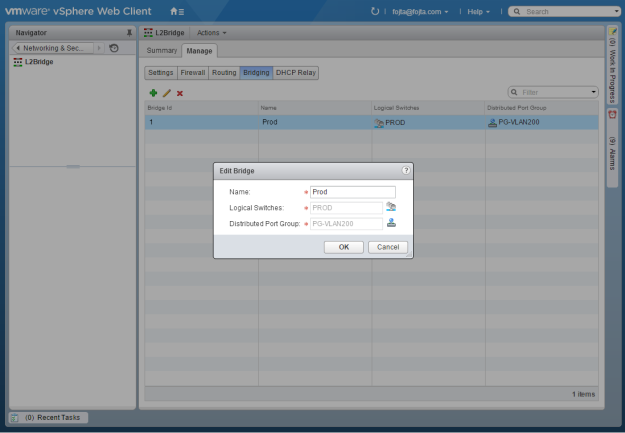

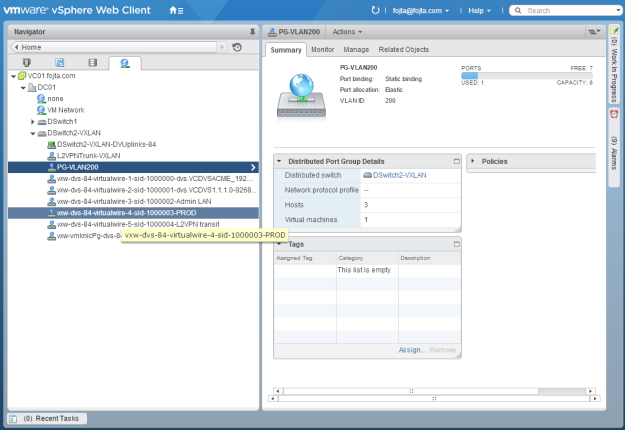

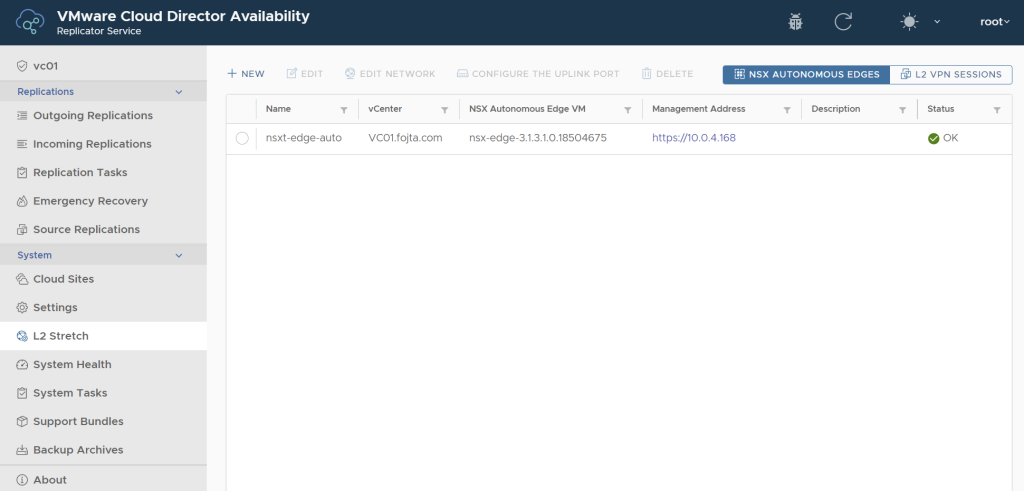

Autonomous Edge

This is the preferred option for non-NSX environments. An autonomous edge is a regular NSX-T edge node that is deployed from OVA but is not connected to NSX-T Manager. During the OVA deployment, the Is Autonomous Edge checkbox must be checked. It provides its own UI and much better performance and configurability than the standalone Edge client. Additionally, the client tunnel configuration can be done via the VCDA on-premises appliance UI. You just need to deploy the autonomous edge appliance; VCDA will discover it and let you manage it from then on via its UI.

This option does not require retrieving the Peer Code, as the VCDA plugin retrieves all necessary information from the cloud site.