Update January 28, 2021: Version 1.2 of VMware NSX Migration for VMware Cloud Director has been released. It supports VMware Cloud Director 10.2 and its new networking features (load balancing, distributed firewall, VRF). It also improves the migration of isolated Org VDC networks with DHCP, and of multiple Org VDC Edge GWs and external networks. Rollback can now be performed at any point.

VMware Cloud Director, as a cloud management solution, is built on top of the underlying compute and networking platforms that virtualize the physical infrastructure. VMware vSphere has always been used for compute and storage. The networking platform story is more interesting. It started with vShield Edge, which was later rebranded to vCloud Networking and Security. Cisco Nexus 1000V was briefly an option. Currently, NSX for vSphere (NSX-V) and NSX-T Data Center are supported.

VMware has announced the sunsetting of NSX-V (end of general support is currently planned for January 2022) and is fully committed to NSX-T Data Center going forward. The two NSX releases are similar, but they are completely different products, and there is no direct upgrade path from one to the other. So it is natural that all existing NSX-V users are asking how to migrate their environments to NSX-T.

NSX-T Data Center Migration Coordinator has been available for some time. However, the way it works is quite destructive for Cloud Director, so it cannot be used in such environments.

Therefore, with VMware Cloud Director 10.1, VMware is releasing a compatible migration tool called VMware NSX Migration for VMware Cloud Director.

The philosophy of the tool is the following:

- Enable granular migration of tenant workloads and networking, one Org VDC at a time and with minimum downtime, from an NSX-V backed Provider VDC (PVDC) to an NSX-T backed PVDC.

- Check and allow migration of only supported networking features

- Evolve with new releases of NSX-T and Cloud Director

In other words, it is not an in-place migration. The provider will need to stand up new NSX-T backed cluster(s) next to the NSX-V backed ones in the same vCenter Server. Also, the current NSX-T feature set in Cloud Director is not equivalent to the NSX-V one, so some networking features cannot be migrated at all. For a comparison of the NSX-V and NSX-T Cloud Director feature sets, see the table at the end of this blog post.

The service provider will therefore need to evaluate which Org VDCs can be migrated today, given the existing limitations and functionality. Start with the simple Org VDCs, and migrate the rest as new releases become available.

How does the tool work?

- It is a Python-based CLI tool that is installed and run by the system administrator. It uses public APIs to communicate with Cloud Director, NSX-T and vCenter Server to perform the migrations.

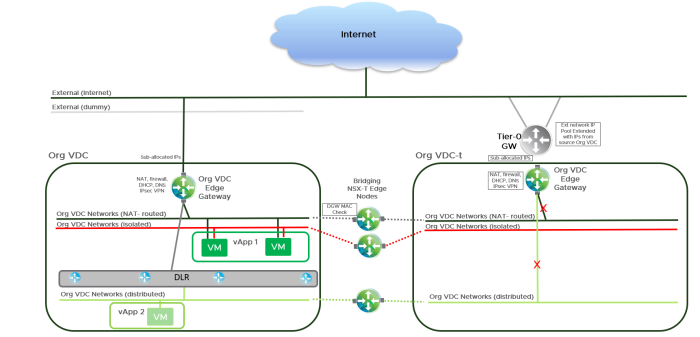

- The environment must be prepared so that an NSX-T backed PVDC exists in the same vCenter Server as the source NSX-V PVDC. Their external networks must also be equivalent at the infrastructure level, because existing external IP addresses are seamlessly migrated as well.

- The service provider defines which source Org VDC (NSX-V backed) is going to be migrated and which Provider VDC (NSX-T backed) is the target.

- The service provider must prepare a dedicated NSX-T Edge Cluster whose sole purpose is Layer-2 bridging of source and destination Org VDC networks. This Edge Cluster needs one node for each migrated network. It must be deployed in the NSX-V prepared cluster, because it bridges VXLAN port groups to NSX-T Overlay (Geneve) logical segments.

- When the tool is started, it will first discover the source Org VDC feature set and assess if there are any incompatible (unsupported) features. If so, the migration will be halted.

- The tool then creates the target Org VDC, clones the network topology, establishes bridging, disconnects the target networks and runs basic network checks to see whether the bridges work properly. If they do not, a rollback is performed (the bridges and the target Org VDC are destroyed).

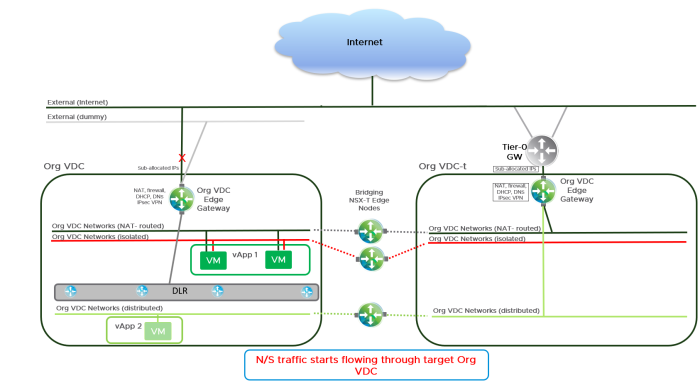

- In the next step, north/south traffic is reconfigured to flow through the target Org VDC. This is done by disconnecting the source networks from the gateway and reconnecting the target ones. A brief N/S network disruption is expected during this step. Also note that the source Org VDC Edge GW must be temporarily connected to a dummy network, because NSX-V requires at least one connected interface on the Edge at all times.

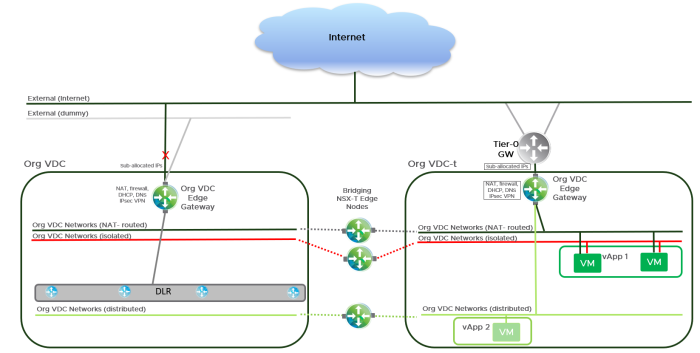

- Each vApp is then vMotioned from the source Org VDC to the target one. As this is a live vMotion, no significant network or compute disruption is expected.

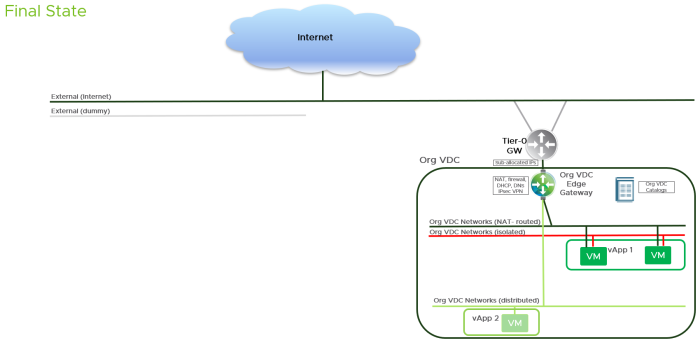

- Once the provider has verified that the target Org VDC works correctly, she can manually trigger the cleanup step. This step migrates the source catalogs, destroys the bridges and the source Org VDC, and renames the target Org VDC.

- Rinse and repeat for the other Org VDCs.
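The phase ordering in the list above can be sketched as a small state machine. This is a minimal illustrative model, not the tool's source code. `Phase`, `run_migration` and the step callables are hypothetical names. The sketch calls `rollback` on any failure after the assessment succeeds, which reflects the "rollback at any point" behavior from the 1.2 update at the top; the first release rolled back only when the bridge checks failed.

```python
from enum import Enum, auto
from typing import Callable, Dict, List, Optional, Tuple


class Phase(Enum):
    ASSESS = auto()          # discover source Org VDC features; halt on unsupported ones
    PREPARE = auto()         # create target Org VDC, clone networks, establish bridges
    VERIFY_BRIDGES = auto()  # basic L2 checks across each bridge
    SWITCH_NS = auto()       # reconnect target networks to the gateway (brief N/S outage)
    MOVE_VAPPS = auto()      # live vMotion of each vApp to the target Org VDC
    CLEANUP = auto()         # catalogs, bridges, source Org VDC removal, rename


def run_migration(steps: Dict[Phase, Callable[[], bool]],
                  rollback: Callable[[], None],
                  cleanup_approved: bool = False) -> Tuple[List[Phase], Optional[Phase]]:
    """Run the phases in order and return (completed phases, failed phase or None)."""
    completed: List[Phase] = []
    for phase in Phase:  # Enum iteration follows definition order
        if phase is Phase.CLEANUP and not cleanup_approved:
            break  # cleanup is triggered manually after the provider verifies the target
        if not steps[phase]():
            if completed:  # a failed ASSESS has changed nothing, so there is nothing to undo
                rollback()
            return completed, phase
        completed.append(phase)
    return completed, None
```

Gating `CLEANUP` behind an explicit flag mirrors the manual cleanup trigger. Nothing destructive happens to the source Org VDC until the provider has verified the target.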

Please make sure you read the release notes and user guide for the list of supported solutions and features. The tool will evolve rapidly: the near-term roadmap already includes pre-validation and rollback features. You are also encouraged to provide early feedback to help VMware decide how the tool should evolve.

Thanks a lot for the post!

Is it also possible to migrate VLAN-backed port groups (not VXLAN)?

Currently not. VCD needs to support enabling promiscuous mode on VLAN-backed networks, which bridging requires.

Thank you for the answer, Tomas. For us as a service provider this is very important: we have a lot of customers running on plain VLANs, outside of VXLAN, in vCloud Director.

Does the migration tool support to migrate “vSphere port group-backed” network? If not, when will the migration tool support to migrate “vSphere port group-backed” network?

Do you mean networks created from a Network Pool of type “Port groups backed”? Frankly, there was no intention of supporting this. It exists only for legacy reasons, and AFAIK the only legitimate use for the port group backed network pool was with 3rd party switches (Cisco Nexus, IBM, …). What is your use case?

Or do you mean a VLAN-backed network pool? Yes, that one will be supported.

There are four Network Pool types: “VXLAN-backed”, “VLAN-backed”, “Port groups backed” and “Geneve backed”. We use a “Port groups backed” Network Pool in our existing deployment. Does the migration tool support “Port groups backed” as the source Network Pool? If not, when will it? Which Network Pool types does the migration tool support as the target, and when?

We currently support only VXLAN-backed. VLAN-backed will come in the next release.

We do not plan to support Port groups backed as we do not see much use as I explained above. What is your use case? Why don’t you use VLAN-backed instead?

We only use the “Port groups backed” network pool. Our network team wants tight control over which VLAN ID is allocated to which Organization, so they asked us to use a “Port groups backed” Network Pool. We created the vSphere port groups on the vCenter VDS in advance, each containing one VLAN ID. The operator then selects the port groups for each Org VDC network pool based on the VLAN ID.

+1

Same for our datacenter

Hi T, is there an alternative to the extra capital outlay for a new set of NSX-T prepared hosts used solely for the migration? This will be difficult to justify budget-wise.

Not necessarily. You can do the migration in a rolling fashion, swinging ESX hosts between clusters as source capacity is freed up.

Are there any plans to support a 31-bit netmask length for VCD networks?

RFC 3021 from year 2000.

Can you provide more details on the use case? Which network (vApp, Org VDC, external) and is that for NSX-V or NSX-T backed PVDC?

NSX-V. In a two-tier ESG hierarchy, Org VDC networks act as transit point-to-point networks between the tenant-level ESG (exposed as an Edge in VCD) and the perimeter-level ESG (not visible in VCD).

Conceptually, the role of those transit networks is very similar to the NSX-T auto-plumbed transit networks between T0 and T1 gateways.

PE <-> Perimeter ESG <-> Tenant ESG <-> VM-LAN

– In some cases, IP-level traceability is required end to end, and public IPv4 addressing has to be used all the way to the tenant VM.

Thanks. Considering this is already possible in NSX-T backed PVDCs I do not think this will be supported for NSX-V external networks.
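The reason a /31 matters for these transit links can be shown with Python's standard `ipaddress` module. The sketch compares a /30 with a /31 under RFC 3021 semantics, where both addresses of a /31 are usable on a point-to-point link. The helper name `usable_addresses` is hypothetical, and the 203.0.113.0/24 documentation range stands in for real public addresses.

```python
import ipaddress
from typing import List


def usable_addresses(cidr: str) -> List[ipaddress.IPv4Address]:
    """Return the host-assignable addresses of an IPv4 subnet.

    A /31 follows RFC 3021: both addresses are usable on a point-to-point
    link because no network or broadcast address is needed.
    """
    net = ipaddress.IPv4Network(cidr)
    if net.prefixlen == 32:
        return [net.network_address]
    if net.prefixlen == 31:
        return list(net)         # iterate over both addresses of the /31
    return list(net.hosts())     # excludes the network and broadcast addresses


for cidr in ("203.0.113.0/30", "203.0.113.0/31"):
    net = ipaddress.IPv4Network(cidr)
    print(cidr, net.num_addresses, [str(a) for a in usable_addresses(cidr)])
# 203.0.113.0/30 4 ['203.0.113.1', '203.0.113.2']
# 203.0.113.0/31 2 ['203.0.113.0', '203.0.113.1']
```

With a /30, each tenant transit link consumes four public IPv4 addresses to yield two usable ones. A /31 consumes only two.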

Hi Thomas! Is this script supported with NSX-T 3.0, or only with NSX-T 2.5.1? I tried to migrate, but the workflow crashed in the “Create Uplink Profile” step. In the logs I see the message “List index out of range”.

Not yet. New version will be released soon.

Is there any news about the NSX-T migration tool for vCloud Director 10.2?

In the works.

Hi,

Do you have at least an approximate date for when VMware NSX Migration for VMware Cloud Director 1.2 will be available?

Hopefully soon. It has some dependencies that must be resolved first.

Hi,

Any news/updates on “VMware NSX Migration for VMware Cloud Director 1.2”?

I’ve been waiting more than two months and don’t know how or when to plan my migration…

In the same boat as Serhii. We need to start our migration from NSX-V backed vcloud director instances to NSX-T as there is only a year of support left on NSX-V (1/16/22). The 1.1 version of the vcdnsxmigrator tool is failing at the vapp move stage and we suspect it is due to the fact we are using NSX-T 3.1 and the tool only supports up to 3.0. We need the VRF functionality that 3.1 brings so downgrading is not an option. Please provide a better timeline as to when version 1.2 will be released for the vcdnsxmigrator tool. Or can you recommend any other tools that will allow us to migrate? We are testing with Cloud Director Availability… but the fact the virtual machines need to be restarted on the target PVDC is less than desirable. Especially since we have shared storage across both PVD… ideally we should be able to complete this move without any downtime to the virtual machines at the very least. Any updates would be helpful.

It has been released yesterday: https://my.vmware.com/en/web/vmware/downloads/info/slug/datacenter_cloud_infrastructure/vmware_cloud_director/10_2#drivers_tools

Whoa! What a coincidence!

Thanks for your reply.

Hi Tomas,

If I have installed only the NSX-V Manager without installing VIBs on hosts, is there any possible way to use the same cluster for the NSX-T migration?

Yes. If the cluster is not NSX-V prepared you can prepare it for NSX-T. The NSX-V Manager can run anywhere.

Is it supported to have one cluster for VM workloads and another cluster only for NSX-T Edge routers? The default Edge consumes 4 vCPUs and 8 GB of RAM. We currently have 260 NSX-V Edges, and I’m not very happy with the idea of migrating everything to a new cluster and raising its overbooking 🙂

The architecture of NSX-V and T gateways is quite different. NSX-T gateways are deployed into NSX-T Edge Cluster(s) where each is a collection of (up to 10) Edge nodes that can be deployed anywhere. NSX-T Edge Cluster is not a vSphere cluster. Edge nodes can even be physical. And there is no 1:1 relationship between Edge node and Tier-1 gateway.

This is about the V-to-T migration tool released on 28/01/21 and its L2 bridging from VXLAN to Geneve. Suppose I had 100 networks, a mix of Org VDC networks and vApp networks. As I understand it, my Edge Cluster would then require 100 edge transport nodes. Is anything in the pipeline that would allow multiple L2 networks to be bridged without the 1:1 relationship between Edge nodes and migrated networks? Thanks in advance.

Same problem here. Some organizations have only VXLANs, but others use L2 (VLAN) networks or both L2 and VXLAN. My numbers are much bigger, though: about 500 L2 VLANs connected to clients 🙂

(Direct network) VLANs do not need to be bridged. You can just import them to the NSX-T backed Org VDC and migrate vApps directly from VCD via Move vApp API.

vApp networks are not bridged (they are migrated as part of the whole vApp object). What is the number of Org VDC networks (routed, isolated) that you have in *single* Org VDC? We currently do not plan to support a different bridging technology.

Sorry, the figure I used earlier was just an example. We have a maximum of 8 routed/isolated VXLAN-backed networks in a single Org VDC, so I guess I need to spin up 8 Edge nodes, which is not so bad. The vApp network bridging question came from a ‘feature friday v-t’ video suggesting that vApp networks would also require bridging. Thanks for the clarification.
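The answers above give a rough way to size the bridging Edge Cluster. Only routed and isolated Org VDC networks are bridged: direct VLAN networks are imported, and vApp networks move with the vApp. One bridging Edge node is needed per bridged network. The sketch below is hypothetical (`OrgVdcNetwork`, the `kind` labels and the helper functions are not part of the tool). It assumes Org VDCs are migrated one at a time and that cleanup removes the bridges, so the cluster only needs to fit the largest Org VDC.

```python
from dataclasses import dataclass
from typing import Iterable, List

# Per the replies above: direct (VLAN) networks are imported rather than bridged,
# and vApp networks move together with the vApp, so only these kinds need a bridge.
BRIDGED_KINDS = {"routed", "isolated"}


@dataclass
class OrgVdcNetwork:
    name: str
    kind: str  # hypothetical labels: "routed", "isolated", "direct", "vapp"


def networks_to_bridge(networks: Iterable[OrgVdcNetwork]) -> List[str]:
    """Names of the networks that need a VXLAN-to-Geneve bridge."""
    return [n.name for n in networks if n.kind in BRIDGED_KINDS]


def bridge_nodes_for_org_vdc(networks: Iterable[OrgVdcNetwork]) -> int:
    """One bridging Edge node per bridged Org VDC network."""
    return len(networks_to_bridge(networks))


def bridge_cluster_size(org_vdcs: Iterable[List[OrgVdcNetwork]]) -> int:
    """Size for the largest Org VDC, assuming sequential migrations."""
    return max((bridge_nodes_for_org_vdc(v) for v in org_vdcs), default=0)
```

For the case described above, an Org VDC with 8 routed/isolated networks yields 8 bridging Edge nodes.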


Hi. We are in the process of migrating a vCloud Director installation from NSX-V to NSX-T. We have the following versions:

vCloud Director: 10.2

NSX-V: 6.4.8

NSX-T: 3.1

vSphere: 7.0

We get an error with version 1.2 of the “VMware NSX Migration for VMware Cloud Director” tool when we run it to migrate a simple vCD Organization with a single edge and network:

2021-02-10 16:39:31,711 [nsxtOperations]:[updateEdgeTransportNodes]:234 [INFO] | Adding Bridge Transport Zone to Bridge Edge Transport Nodes.

2021-02-10 16:39:32,025 [vcdNSXMigrator]:[run]:644 [ERROR] | list index out of range

As mentioned, we are trying version 1.2 of the tool (Windows version) and getting the error: [vcdNSXMigrator]:[run]:644 [ERROR] | list index out of range.

This error seems to be related to the Bridge Edge Cluster (EdgeClusterName:) variable in UserInput.yml. In version 1.2 this variable is typed as a “list”, but in version 1.1 of the tool it is typed as a “string”. We are trying the tool on a simple Org with only one routed internal network, so the tool needs only one bridge Edge Cluster. The tool's precheck passes, but we can’t get past this “list index out of range” error.

We haven’t tried version 1.1 of the tool, because we are on vCD 10.2, NSX-T 3.1 and vSphere 7.0, and we are not sure version 1.1 is compatible.

Any ideas?

We are seeing the exact same behavior in our lab, with the exact same software versions. Version 1.1 of vcdnsxmigrator doesn’t list support for vCloud Director 10.2.

We were able to get past the “list index out of range” error by making two changes. First, the host switch name of the Overlay TZ must match that of the bridge Edge node. Second, the Bridge TZ must use a different switch name. In our case the Overlay TZ and the Edge node didn’t match, and the Bridge TZ did match the Overlay switch name. We had to delete the bridge Edge node and redeploy it, this time matching the switch name on the Overlay TZ. We then deleted the Bridge TZ and used the API to create a new one with a switch name that matched neither the Overlay TZ nor the bridge Edge node. (Thanks to @woueb for helping us get past this one.)
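The host switch naming rule described above can be checked before running the tool: the bridge Edge node shares the Overlay TZ host switch name, and the Bridge TZ uses a different one. This is a minimal sketch under assumptions. The function names are hypothetical, and you would read the switch names from NSX-T Manager yourself. The payload shape for recreating the Bridge TZ through the NSX-T Manager API (`POST /api/v1/transport-zones` with `display_name`, `host_switch_name` and `transport_type`) is also an assumption; verify it against the API guide for your NSX-T version.

```python
from typing import Dict, List


def check_switch_names(overlay_tz_switch: str,
                       bridge_tz_switch: str,
                       bridge_node_switches: Dict[str, List[str]]) -> List[str]:
    """Return human-readable problems; an empty list means the naming rule holds."""
    problems = []
    if bridge_tz_switch == overlay_tz_switch:
        problems.append(f"Bridge TZ reuses the overlay host switch name '{overlay_tz_switch}'")
    for node, switches in sorted(bridge_node_switches.items()):
        # Each bridge Edge node needs a host switch with the Overlay TZ's name
        if overlay_tz_switch not in switches:
            problems.append(f"{node}: no host switch named '{overlay_tz_switch}'")
    return problems


def bridge_tz_payload(display_name: str, host_switch_name: str) -> dict:
    """Assumed request body for POST /api/v1/transport-zones (verify for your version)."""
    return {
        "display_name": display_name,
        "host_switch_name": host_switch_name,
        "transport_type": "VLAN",  # assumption: edge bridging uses a VLAN transport zone
    }
```

The default host switch name quoted later in this thread is `nsxDefaultHostSwitch`, so a Bridge TZ recreated with that name would be flagged.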

Unfortunately, after getting past that, we ran into another issue during the next step, “Attaching bridge endpoint profile to logical switch”. We looked at the logs and repeated the API call shown there. The Org VDC network name didn’t match between vCloud Director and NSX-T. In vCloud Director the network was called “networkname-v2t-uuid”, whereas in NSX-T the segment was called “networkname-v-uuid”. I manually changed the segment name to include the “2t” piece and was then able to proceed past that step. Note that the NSX-T Org VDC network/segment is created by the vcdnsxmigrator tool itself. I have to do more testing to determine whether this is an issue with the tool or with my naming scheme.

We are now at the “Moving vApp – to target Org VDC” step, and another error has us stuck again: “Invalid control character at: line 1 column 1770 (char 1769)”.

2021-02-15 11:03:12,331 [connectionpool]:[_make_request]:433 [DEBUG] | https://192.x.x.x:443 “GET /cloudapi/1.0.0/vdcs/urn:vcloud:vdc:e337ea74-ad64-45f3-b593-adb2ba5df4ac/computePolicies?filter=isSizingOnly==true&links=true HTTP/1.1” 200 None

2021-02-15 11:03:12,333 [vcdOperations]:[getVmSizingPoliciesOfOrgVDC]:1149 [DEBUG] | Retrieved the VM Sizing Policy of Org VDC urn:vcloud:vdc:e337ea74-ad64-45f3-b593-adb2ba5df4ac successfully

2021-02-15 11:03:12,333 [vcdOperations]:[createMoveVappVmPayload]:748 [DEBUG] | Getting VM – yVM details

2021-02-15 11:03:12,361 [utils]:[createPayload]:167 [DEBUG] | Successfully created payload.

2021-02-15 11:03:12,387 [utils]:[createPayload]:167 [DEBUG] | Successfully created payload.

2021-02-15 11:03:12,414 [utils]:[createPayload]:167 [DEBUG] | Successfully created payload.

2021-02-15 11:03:12,414 [threadUtils]:[_runThread]:73 [ERROR] | Invalid control character at: line 1 column 1770 (char 1769)

Traceback (most recent call last):

File “src\commonUtils\threadUtils.py”, line 64, in _runThread

File “src\core\vcd\vcdValidations.py”, line 46, in inner

File “src\core\vcd\vcdOperations.py”, line 2434, in moveVappApiCall

File “json\__init__.py”, line 357, in loads

File “json\decoder.py”, line 337, in decode

File “json\decoder.py”, line 353, in raw_decode

Did you find a solution to this? We just hit it.

Yes. It was caused by the description of one of the VMs. We were using tinylinuxVMs, which had a long default description. We deleted the description and got past the error above.
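Python's `json` module raises `Invalid control character` when a raw control character, such as a newline inside a VM description, ends up unescaped inside a JSON string. The traceback suggests, but does not prove, that the tool builds its Move vApp payload from a template and parses it with `json.loads`. The sketch below uses hypothetical helper names. It finds such characters ahead of time and contrasts splicing text into a JSON template with serializing it through `json.dumps`. It flags every Unicode `Cc` character, which is slightly broader than the set strict JSON parsing rejects.

```python
import json
import unicodedata
from typing import List


def control_characters(text: str) -> List[str]:
    """Code points of Unicode control characters (category Cc) found in text."""
    return [f"U+{ord(c):04X}" for c in text if unicodedata.category(c) == "Cc"]


def payload_from_template(description: str) -> dict:
    # Splicing raw text into a JSON template: a newline or tab inside the
    # string makes json.loads fail with "Invalid control character at ...".
    return json.loads('{"description": "%s"}' % description)


def payload_serialized(description: str) -> dict:
    # json.dumps escapes control characters, so the result parses cleanly.
    return json.loads(json.dumps({"description": description}))
```

Running `control_characters` over all VM descriptions in the source Org VDC before the migration shows which descriptions to clean up, as was done manually above.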

Can you please open tickets for the issues?

A ticket was opened this morning, with screenshots and logs. FYI, the last time I opened a ticket with support, the engineer had never heard of the vcdnsxmigrator tool.

VMware Ticket # 21195821902

Likewise today we escalated with: Support Request # 21195942302

Likewise Support Request # 21195942302

Hi.

FYI

We managed to get the script working on a simple Org with one NSX-V Edge and two routed internal networks (with some NAT, firewall and policy-based IPsec L2L VPN configuration). We did not encounter any more errors once the “[ERROR] | list index out of range” issue was resolved.

This was resolved with Jared’s advice:

– We recreated the Bridge TZ via the REST API with a new host-switch-name (different from the default name).

– We recreated the bridge-edge-nodes with the same host-switch-name as the Overlay-TZ host-switch-name.

(The default host-switch-name is: nsxDefaultHostSwitch)

(Thanks Jared)

(We have not heard back from VMware Support apart from a log request, but we will post an update on our situation.)

We did not execute a post “cleanup” with the script.

We tried a manual rollback of one vApp/VM to see whether it was possible. (We are not sure whether this would create problems later if we had to do a full rollback.)

For a tested manual rollback we did the following:

– Power off VM

– Remove Dummy Network from NSX-V Edge (We did this from NSX-V manager)

– Re-enabled the external network on the NSX-V Edge and changed the external IP to a new temporary IP (we had to reconnect the original external interface from NSX-V Manager)

– Recreated the internal routed networks and connected them to the NSX-V Edge (the original NSX-V internal networks had been changed from routed to isolated and could not be used)

– Moved VM from NSX-T VDC to new vAPP in NSX-V VDC

– Reconnected the VM to the new NSX-V internal routed network.

We tried this to see what would still work if we had to execute a manual rollback. We especially wanted to avoid recreating the original NAT, firewall and IPsec VPN configuration on the original NSX-V Edge.

As mentioned, we did have to execute a few tasks in NSX-V Manager on the original NSX-V Edge.

(We are not sure what future impact this may have. After those changes we redeployed the Edge to leave it as it was, and it seemed to work OK afterwards.)

Any suggestions on how to best manage a manual rollback or a manual migration (if needed) are welcomed.

Thanks.

We had a problem migrating a VDC because of a Spanish character (á) in the VDC description. The migration failed with an HTTP 400 error while creating a metadata entry. The error stated that the problem was a UTF-8 character.

Keep this in mind if your users write descriptions in languages that use such characters.
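Until the metadata issue is resolved, a pre-flight inventory of non-ASCII characters in Org VDC descriptions shows which objects to edit temporarily or to watch during the migration. This is a minimal sketch. It assumes you have already exported the descriptions into a dictionary; how you retrieve them is up to you. `non_ascii_report` is a hypothetical helper, and the sketch does not establish the root cause of the HTTP 400 error. The example writes "á" as the escape `\u00e1`.

```python
import unicodedata
from typing import Dict, List


def non_ascii_report(descriptions: Dict[str, str]) -> Dict[str, List[str]]:
    """Map object name -> Unicode names of the non-ASCII characters in its description."""
    report: Dict[str, List[str]] = {}
    for name, text in descriptions.items():
        found = [unicodedata.name(c, f"U+{ord(c):04X}") for c in text if ord(c) > 0x7F]
        if found:
            report[name] = found
    return report


print(non_ascii_report({"vdc-madrid": "Centro de datos M\u00e1s", "vdc-london": "Production"}))
# {'vdc-madrid': ['LATIN SMALL LETTER A WITH ACUTE']}
```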

Thanks for reporting. We will investigate the issue.

Has anyone hit the same error and found a solution? We are on vSphere 7, NSX-T 3.1.1 and VCD 10.2.1.

2021-03-05 14:41:12,937 [nsxtOperations]:[verifyBridgeConnectivity]:465 [ERROR] | Bridging Connectivity checks failed. Source Edge gateway MAC address could not learned by edge nodes

2021-03-05 14:41:12,939 [vcdNSXMigrator]:[run]:644 [ERROR] | Bridging Connectivity checks failed. Source Edge gateway MAC address could not learned by edge nodes

Traceback (most recent call last):

File “src\vcdNSXMigrator.py”, line 599, in run

File “src\core\vcd\vcdValidations.py”, line 93, in wrapped

File “src\core\nsxt\nsxtOperations.py”, line 59, in inner

File “src\core\nsxt\nsxtOperations.py”, line 49, in inner

File “src\core\nsxt\nsxtOperations.py”, line 466, in verifyBridgeConnectivity

Exception: Bridging Connectivity checks failed. Source Edge gateway MAC address could not learned by edge nodes

2021-03-05 14:41:12,942 [vcdNSXMigrator]:[run]:645 [CRITICAL] | VCD V2T Migration Tool failed due to errors. For more details, please refer main log file C:\vcdNSXMigrator\logs\VCD-NSX-Migrator-Main-05-03-2021-14-30-22.log

The migration tool connects to each bridging Edge node via CLI and verifies L2 connectivity by querying the MAC address of the default GW. It uses the same admin password as the one specified for NSX-T Manager in the YAML spec, so make sure all the Edge nodes use that same password.
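The function below sketches what this check looks for. It parses `MAC-SYNC Table` lines in the format printed in the tool's debug log (a sample appears later in this thread) and tests whether a given gateway MAC is present. The parser and the way you obtain the source Edge GW MAC are assumptions; this is not the tool's own code.

```python
from typing import Dict, List


def parse_mac_sync_table(lines: List[str]) -> List[Dict[str, str]]:
    """Group 'KEY : value' lines into one dict per MAC entry."""
    entries: List[Dict[str, str]] = []
    current: Dict[str, str] = {}
    for raw in lines:
        line = raw.strip()
        if not line or line == "MAC-SYNC Table":
            continue  # skip the header and blank separators
        key, _, value = line.partition(":")  # split on the first colon only
        key, value = key.strip(), value.strip()
        if key == "MAC" and current:
            entries.append(current)  # a new MAC line starts a new entry
            current = {}
        current[key] = value
    if current:
        entries.append(current)
    return entries


def gateway_mac_learned(lines: List[str], gateway_mac: str) -> bool:
    """True if the source Edge GW MAC appears in the bridge's MAC-SYNC table."""
    wanted = gateway_mac.lower()
    return any(e.get("MAC", "").lower() == wanted for e in parse_mac_sync_table(lines))
```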

Hi! Did you manage to resolve this? I am running into the same issue – the bridge tunnel doesn’t seem to come up or something else networking related that prevents the tunnel from being set up.

We’ve hit the same issue also.

Any suggestions?

It turns out there is a bug in the latest version of the script (1.3). It will be fixed in the next release.

Workaround:

1. Locate the logical segment in NSX-T, open it in edit mode and click “Edge Bridges 1”. This takes you to the edge bridge section.

2. Edit the edge bridge. The VLAN section shows a VLAN ID; change it to 0.

3. Continue the migration. The bridging verification should now pass, and the migration will proceed.

Hi

I have a problem migrating a VDC with the migration tool. I always get the following warning:

“[vcdNSXMigrator]:[run]:601 [WARNING] | Skipping the NSXT Bridging configuration and verifying connectivity check as no source Org VDC network exist”

and then error

Failed to move vApp – test with errors [ d69cd99c-9183-4157-bb6d-d4cbebb95ed3 ] Provided network(s) [DMZ-v2t] was not defined as a vApp Network

Can someone please explain what it means that the migrated network was not defined as a vApp network?

Thanks

What is your Org VDC network topology?

One edge gateway connected to an external network, with one routed network (DMZ) and one vApp in the DMZ. It is a very simple scenario. I just wanted to test how the migration works, but I always get the error mentioned above.

That is indeed weird. Could you please file an SR so we can review the logs, etc.?

Hi,

Just want to share my experience.

I was able to migrate 5 tenants in my test environment, but my journey was very bumpy.

1. At first I got this error:

2021-04-22 14:38:07,282 [vcdNSXMigrator]:[run]:644 [ERROR] | expected string or bytes-like object

Traceback (most recent call last):

File “src\vcdNSXMigrator.py”, line 497, in run

File “src\vcdNSXMigrator.py”, line 235, in inputValidation

File “c:\users\vmware\appdata\local\programs\python\python38\lib\re.py”, line 191, in match

TypeError: expected string or bytes-like object

2021-04-22 14:38:07,285 [vcdNSXMigrator]:[run]:645 [CRITICAL] | VCD V2T Migration Tool failed due to errors. For more details, please refer main log file

It turned out to be caused by copying and pasting from the Cloud Director web interface into UserInput.yml. The pasted text contained hidden special characters that could not be seen in Notepad.

2. Next, I got:

2021-04-28 13:16:21,586 [nsxtOperations]:[verifyBridgeConnectivity]:450 [DEBUG] | Bridge ports mac sync table – [‘MAC-SYNC Table’, ‘MAC : 00:50:56:85:36:98’, ‘VNI : 71682’, ‘VLAN : 4096’, ‘bridge-port-uuid: b150131d-8381-42dd-954f-c388bd9d126e’, ‘ ‘, ‘MAC : 00:50:56:ab:6b:8b’, ‘VNI : 71682’, ‘VLAN : 4096’, ‘bridge-port-uuid: b150131d-8381-42dd-954f-c388bd9d126e’, ”, ”]

2021-04-28 13:16:21,586 [nsxtOperations]:[verifyBridgeConnectivity]:465 [ERROR] | Bridging Connectivity checks failed. Source Edge gateway MAC address could not learned by edge nodes

2021-04-28 13:16:21,587 [vcdNSXMigrator]:[run]:644 [ERROR] | Bridging Connectivity checks failed. Source Edge gateway MAC address could not learned by edge nodes

Traceback (most recent call last):

File “src\vcdNSXMigrator.py”, line 599, in run

File “src\core\vcd\vcdValidations.py”, line 93, in wrapped

File “src\core\nsxt\nsxtOperations.py”, line 59, in inner

File “src\core\nsxt\nsxtOperations.py”, line 49, in inner

File “src\core\nsxt\nsxtOperations.py”, line 466, in verifyBridgeConnectivity

Exception: Bridging Connectivity checks failed. Source Edge gateway MAC address could not learned by edge nodes

2021-04-28 13:16:21,590 [vcdNSXMigrator]:[run]:645 [CRITICAL] | VCD V2T Migration Tool failed due to errors. For more details, please refer main log file

Troubleshooting this error, I noticed that fp-eth2 interface was down on one of the two nodes of the Edge Bridge cluster. I manually connected the required port-group to the Edge transport node via vCenter and it did the trick.

3. Next, all the script's connectivity checks succeeded and the migration completed, but the VMs had no external network connectivity in either direction. This time the cause was the Tier-0 Gateway route re-distribution settings. I had forgotten to enable static routes and NAT IP advertisement (only connected segments were advertised).

So be careful, don’t forget to enable Route Re-distribution advertisement on Tier-0 Gateway!

4. Next, I got:

2021-04-28 16:55:53,393 [vcdNSXMigrator]:[run]:644 [ERROR] | Failed to disable Source Org VDC – [ a70a0b5d-a3f6-40e3-9ab9-0b17f9d28bc6 ] The default storage policy for the specified VDC cannot be disabled. Please select a new default storage policy first.

Traceback (most recent call last):

File “src\vcdNSXMigrator.py”, line 577, in run

File “src\core\vcd\vcdValidations.py”, line 93, in wrapped

File “src\core\vcd\vcdValidations.py”, line 81, in inner

File “src\core\vcd\vcdValidations.py”, line 71, in inner

File “src\core\vcd\vcdValidations.py”, line 2905, in preMigrationValidation

File “src\core\vcd\vcdValidations.py”, line 46, in inner

File “src\core\vcd\vcdValidations.py”, line 916, in disableOrgVDC

Exception: Failed to disable Source Org VDC – [ a70a0b5d-a3f6-40e3-9ab9-0b17f9d28bc6 ] The default storage policy for the specified VDC cannot be disabled. Please select a new default storage policy first.

2021-04-28 16:55:53,402 [vcdNSXMigrator]:[run]:645 [CRITICAL] | VCD V2T Migration Tool failed due to errors. For more details, please refer main log file

Just make sure that you have more than one storage policy (SP) in each Org VDC you are going to migrate.

5. In all my “successful” migrations I always got this error:

2021-04-28 18:18:03,336 [vcdValidations]:[_checkTaskStatus]:2386 [DEBUG] | Checking status for task : vdcMoveVapp

2021-04-28 18:18:03,336 [vcdValidations]:[_checkTaskStatus]:2397 [DEBUG] | Task vdcMoveVapp is in Error state [ 1b33288b-543d-410d-8262-0736fbec7797 ] Internal Server Error

2021-04-28 18:18:03,336 [threadUtils]:[_runThread]:73 [ERROR] | [ 1b33288b-543d-410d-8262-0736fbec7797 ] Internal Server Error

Traceback (most recent call last):

File “src\commonUtils\threadUtils.py”, line 64, in _runThread

File “src\core\vcd\vcdValidations.py”, line 46, in inner

File “src\core\vcd\vcdOperations.py”, line 2447, in moveVappApiCall

File “src\core\vcd\vcdValidations.py”, line 46, in inner

File “src\core\vcd\vcdValidations.py”, line 2398, in _checkTaskStatus

Exception: [ 1b33288b-543d-410d-8262-0736fbec7797 ] Internal Server Error

Duplicate vApps with the suffix “-generated” in their names were also created. The migrated VMs were split between two vApps: the original one and the “-generated” one.

6. On average, for a simple tenant with several VMs and two OrgVDC routed networks, the downtime for external network switching was about 6 minutes.

Is it normal? How much did you have?
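Two of the issues in the list above can be caught before starting: missing Tier-0 route redistribution types, and Org VDCs with only one storage policy. This is a minimal pre-flight sketch. It assumes you have fetched the Tier-0 locale-services object and each Org VDC's storage policy names yourself. The field names (`route_redistribution_config`, `redistribution_rules`, `route_redistribution_types`) and the values `TIER1_CONNECTED`, `TIER1_STATIC` and `TIER1_NAT` are assumptions based on the NSX-T Policy API; verify them for your NSX-T version. `preflight` is a hypothetical helper.

```python
from typing import Dict, List, Set

# Assumed NSX-T Policy API values for Tier-1 routes that the Tier-0 should
# redistribute for VCD tenants (connected, static and NAT); verify for your version.
REQUIRED_REDISTRIBUTION: Set[str] = {"TIER1_CONNECTED", "TIER1_STATIC", "TIER1_NAT"}


def enabled_redistribution(locale_service: dict) -> Set[str]:
    """Collect redistribution types from all rules of a Tier-0 locale service."""
    config = locale_service.get("route_redistribution_config", {})
    rules = config.get("redistribution_rules", [])
    return {t for rule in rules for t in rule.get("route_redistribution_types", [])}


def preflight(locale_service: dict, policies_by_org_vdc: Dict[str, List[str]]) -> List[str]:
    """Return a list of problems found before starting a migration."""
    problems: List[str] = []
    missing = REQUIRED_REDISTRIBUTION - enabled_redistribution(locale_service)
    if missing:
        problems.append("Tier-0 redistribution missing: " + ", ".join(sorted(missing)))
    for org_vdc, policies in sorted(policies_by_org_vdc.items()):
        # With a single storage policy (necessarily the default), disabling the
        # source Org VDC failed in the report above.
        if len(policies) < 2:
            problems.append(f"{org_vdc}: only one storage policy")
    return problems
```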

Hi, apologies, I’m not sure I fully understand this paragraph and seem to be confusing myself, so I wanted to clarify. I have created a new NSX-T vSphere cluster. I read that I also need to create a dedicated NSX-T Edge Cluster in the NSX-V prepared ‘old’ cluster for bridging purposes. Is this in addition to the NSX-T Edge Cluster that hosts the logical components providing centralized services?

‘The service provider must prepare dedicated NSX-T Edge Cluster whose sole purpose is to perform Layer-2 bridging of source and destination Org VDC networks. This Edge Cluster needs one node for each migrated network and must be deployed in the NSX-V prepared cluster as it will perform VXLAN port group to NSX-T Overlay (Geneve) Logical Segment bridging’

NSX-T Edge Cluster is a logical object that groups similar NSX-T Edge Nodes together. It is not a vSphere Cluster. Also you do not really deploy NSX-T Edge Cluster. You deploy NSX-T Edge Nodes and group them to an Edge Cluster.

So you will need:

– (at least) two vSphere clusters, one for NSX-V backed PVDC, one for NSX-T backed PVDC

– (at least) two NSX-T Edge Clusters, one for running Tier-0 and Tier-1 GWs, the other for V to T bridging purposes.

The Tier-0/1 GW NSX-T Edge Cluster can run virtually anywhere (on any vSphere cluster). The bridging NSX-T Edge Cluster must be deployed in the NSX-V vSphere Cluster as the Edge nodes must be able to connect to VXLAN port groups.
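The layout above can be expressed as a few checks on an inventory. This is a hypothetical sketch: the data classes, the `role` labels and `check_design` are illustrative, not an NSX-T API. It verifies that both a gateway Edge Cluster and a bridging Edge Cluster exist, that no Edge Cluster exceeds the 10-node limit mentioned earlier in the comments, and that every bridging Edge node runs in the NSX-V vSphere cluster.

```python
from dataclasses import dataclass
from typing import List

MAX_EDGE_NODES_PER_CLUSTER = 10  # per the earlier reply: "up to 10" Edge nodes


@dataclass
class EdgeNode:
    name: str
    vsphere_cluster: str  # vSphere cluster the Edge node VM runs in


@dataclass
class EdgeCluster:
    name: str
    role: str  # hypothetical labels: "gateways" (Tier-0/1) or "bridging"
    nodes: List[EdgeNode]


def check_design(edge_clusters: List[EdgeCluster], nsxv_cluster: str) -> List[str]:
    """Return problems with the Edge Cluster layout; empty means it looks valid."""
    problems: List[str] = []
    roles = {c.role for c in edge_clusters}
    for needed in ("gateways", "bridging"):
        if needed not in roles:
            problems.append(f"no NSX-T Edge Cluster for {needed}")
    for c in edge_clusters:
        if len(c.nodes) > MAX_EDGE_NODES_PER_CLUSTER:
            problems.append(f"{c.name}: {len(c.nodes)} nodes exceeds {MAX_EDGE_NODES_PER_CLUSTER}")
        if c.role == "bridging":
            # Bridging nodes must reach VXLAN port groups, so they run on NSX-V hosts
            for n in c.nodes:
                if n.vsphere_cluster != nsxv_cluster:
                    problems.append(f"{n.name}: bridging node must run in {nsxv_cluster}")
    return problems
```

The gateway Edge Cluster has no placement check, matching the statement that it can run on any vSphere cluster.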

Hi, the latest release of the migration tool (1.3.1) does not support routed vApp networks. Is there a process guide for doing this manually outside of the migration tool?

Routed vApp network support is still on the migration tool roadmap. However, it will need VCD 10.3.2, which will have proper Move vApp API support.

Hi Tom,

I have to say thank you for all your work, you have helped me out many times!

I have a few questions related to this design as we are looking at implementing something very similar: https://fojta.files.wordpress.com/2021/01/internet-with-mpls.png

1) Is the T0-internet GW the parent to the T0-VRFs depicted, or a separate T0?

2) Can you provide any further detail on how you utilised overlay networks between the ETNs of the VRFs and the T0-internet GW?

Re 1) It must be a separate T0.

Re 2) You just create individual overlay segments and use them for the transits between VRF and the separate T0. See: Figure 4-54 in the design guide: https://nsx.techzone.vmware.com/resource/nsx-t-reference-design-guide-3-0#_Toc503250664

Thanks very much Tom. I am continuing the testing with this before rolling out.

One other quick question. In the same scenario, am I right to assume that the uplinks from the Tier0-parent are there mainly to meet the requirement of the Tier0 – especially in the case of the MPLS with VLANs? I expect that they would not be doing anything meaningful otherwise?

Hi Tom, we would appreciate it if you could help us with a question regarding NSX-T migration with VCD.

We are currently conducting tests to migrate from NSX-V to NSX-T using the migration tool. Some of our customers have load balancers configured on NSX-V edges, and we have deployed NSX-LB with AVI Networks, which assumes the load-balanced services.

We have encountered an issue during the migration of a test customer with a simple load-balanced service. This load balancing (LB) service has two web servers in the same subnet, and we have configured an IP address for the LB in the same subnet as the two web servers.

We are using IP Spaces for the T1 Gateway. When we attempted to migrate this test customer, the migration tool failed in the ‘services’ phase while configuring the load balancer on the NSX-T side. The specific error was: ‘Cannot use IP 36.36.36.2 within IP Space 36.36.36.1/24 as the IP is not allocated yet.’ In the ‘topology’ phase, the migration tool creates a Private IP Space for the network and configures an IP prefix with the subnet ‘36.36.36.0/24’. The script then fails, because the migration tool cannot allocate an IP address from this private IP Space.

We have some customers with this scenario, and we are unsure how to resolve this issue.

Do you have any ideas on how we could migrate this kind of load-balanced service?

Thank you and congratulations for your great contribution.

I suggest opening an SR ticket to investigate whether this is a bug in the tool. Your alternatives are either not to use IP Spaces, or to request the IP manually (via the API) and resume the failed workflow.
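Before resuming the workflow, it can help to list which load balancer VIPs fall inside the Private IP Space prefix but are not yet allocated. This sketch uses the standard `ipaddress` module. `vips_needing_allocation` is hypothetical, and the list of allocated IPs is assumed to come from your own export. The allocation request itself, made via the VCD API as suggested above, is not shown. `strict=False` accepts a prefix written with host bits set, such as `36.36.36.1/24` in the error message.

```python
import ipaddress
from typing import Iterable, List


def vips_needing_allocation(ip_space_prefix: str,
                            allocated: Iterable[str],
                            vips: Iterable[str]) -> List[str]:
    """VIPs inside the IP Space prefix that are not yet allocated from it."""
    net = ipaddress.ip_network(ip_space_prefix, strict=False)  # 36.36.36.1/24 -> 36.36.36.0/24
    taken = {ipaddress.ip_address(a) for a in allocated}
    needed: List[str] = []
    for vip in vips:
        addr = ipaddress.ip_address(vip)
        if addr in net and addr not in taken:
            needed.append(vip)
    return needed
```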

Hi Tom! First of all, we want to express our appreciation for your prompt assistance. We are planning to consult with our TAM to discuss the possibility of opening a service request for a thorough review.

Regarding the workaround you suggested, I have some concerns. We initially thought that the use of IP Space would be mandatory in VCD 10.5 (currently, we’re on version 10.4.2.2). On another note, when attempting to manually configure the same LB service in the GUI, we encounter the same error message. Do you believe that using the API to request the IP manually could yield better results?

Usage of IP Spaces is optional and you can convert to them later. It could be VCD bug if you see the same issue in UI. Best to open SR with VCD product then.

Got it, Tom! Thank you for your assistance. We’ve already initiated a case to investigate this.