With the upcoming end-of-life of VMware NSX Migration for VMware Cloud Director, which has been announced here, the source code and all released builds are now publicly available in the Calsoft GitHub repository: https://github.com/Calsoft-Pvt-Ltd/NSX_V2T_Migration_Tool. Calsoft engineers have developed the tool from the beginning, so they have the expertise to maintain the code and support those service providers that have not yet been able to migrate. If you are such a provider, I encourage you to contact Calsoft: techsupport@calsoftinc.com.

VMware Cloud Director In-place Migration from NSX-V to NSX-T

VMware NSX Migration for VMware Cloud Director is a tool that automates migration of NSX-V backed Org VDCs to NSX-T backed provider VDC (PVDC). It is a side-by-side migration that requires standing up a new NSX-T cluster(s) to accommodate the migration. I described the process in my older blog.

However, there might be a situation that prevents this approach – either because hardware is not available or because only one cluster is available (VCD on top of VMware Cloud Foundation management domain).

We have derived and tested a procedure that enables in-place migration for such corner cases. It is not simple and requires the concurrent usage of NSX Migration Coordinator and NSX Migration for VCD. It also has some limitations, but it can be an option when the regular side-by-side migration is not possible.

High Level Overview

At a high level the procedure is from VCD perspective still side by side and uses NSX Migration for VCD, but both source PVDC and target PVDC reside on the same cluster (with resource pool backing) while NSX Migration Coordinator is converting individual hosts from NSX-V to NSX-T and temporarily bridging VXLAN and Geneve networks.

Here are the high level steps:

- Make sure the source PVDC (NSX-V backed) has vSphere resource pool backing (instead of whole cluster). Also make sure VCD version is at least 10.4.2.2.

- Partially prepare target NSX-T Environment (deploy Managers and Edge Nodes)

- If load balancing is used, also partially prepare NSX ALB (deploy Controllers and configure NSX-T Cloud and Service Engine Groups)

- Create the target NSX-T backed PVDC with resource pool backing on the same cluster

- Migrate network topology of all Org VDCs using the cluster with the NSX Migration for VCD (while using identical VNIs for the Geneve backed networks)

- Convert cluster ESXi hosts from NSX-V to T and migrate workloads to Geneve backed networks with NSX Migration Coordinator

- Migrate Org VDC network services with NSX Migration for VCD

- Migrate vApps with NSX Migration for VCD

- Clean up source Org VDCs with NSX Migration for VCD

- Clean up NSX-V from VCD and vCenter

Detailed Migration Steps

PVDC backing with Resource Pools

The source PVDC must be backed by a resource pool (RP), otherwise we will not be able to create another PVDC on the same cluster. If the PVDC is using the whole cluster (its root RP), then it must be converted. The conversion is not difficult but requires a DB edit, therefore GSS support is recommended:

1. Create a child RP under the vSphere cluster to be used as a PVDC RP.

2. Move all PVDC child RPs (that were under the cluster directly, including System vDC RP) under this new RP.

3. Note down the VC moref of this new RP.

4. Update VCD vc_computehub and prov_vdc tables in VCD database to point the rp_moref to this new RP moref from VC (step 3).

If necessary, upgrade VCD to version 10.4.2.2 or newer.

Prepare NSX-T Environment

- Deploy NSX-T Managers

- Deploy NSX-T Edge Nodes. Note that the Edge node transport zone underlay (transport) VLAN must be routable with the existing VXLAN underlay (transport) VLAN (or it must be the same VLAN). The ESXi hosts when converted by NSX Migration Coordinator will reuse the VXLAN transport VLAN for its TEP underlay.

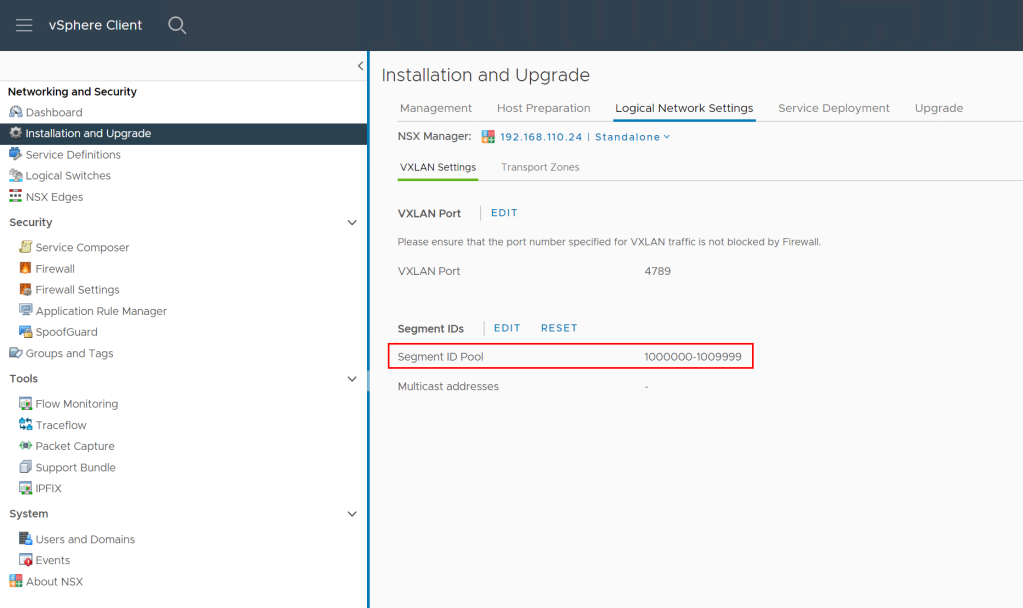

- Make sure that Geneve overlay ID VNI Pool interval contains the VXLAN VNIs used in NSX-V

- Create overlay transport zone

- Register NSX-T manager to VCD

- Register VC as Compute Manager in NSX-T Manager

- Create Geneve backed network pool in VCD (utilizing transport zone from step #4)

- Create Tier-0 and VRF gateways as necessary based on your desired target topology

- Create provider gateways in VCD using Tier-0 or VRF GWs created in step #8
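The VNI pool requirement from the list above can be checked before any migration step is run. The following is a minimal sketch in Python, assuming you have already collected the VXLAN VNIs in use on the NSX-V side and the inclusive start/end ranges of the Geneve VNI pool by your own means. The function name and all values are hypothetical and not part of any VMware tool.

```python
from typing import Iterable, List, Tuple


def vnis_outside_pool(
    vxlan_vnis: Iterable[int],
    pool_ranges: Iterable[Tuple[int, int]],
) -> List[int]:
    """Return the source VXLAN VNIs that no (start, end) pool range covers.

    Ranges are treated as inclusive on both ends.
    """
    ranges = list(pool_ranges)
    return sorted(
        vni
        for vni in set(vxlan_vnis)
        if not any(start <= vni <= end for start, end in ranges)
    )


# Hypothetical example values, not taken from a real environment.
source_vnis = [5000, 5001, 5012, 6100]
geneve_pool = [(5000, 5999)]

# Any VNI listed here would require extending the Geneve VNI pool first.
missing = vnis_outside_pool(source_vnis, geneve_pool)
```

An empty result means every source VNI can be reused on the Geneve side.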

Prepare NSX ALB Environment (optional)

This step is necessary only if load balancing is used.

- Deploy Avi Controllers and import the Avi Controller Cluster into VCD

- Prepare management segment and Tier-1 GW for Avi NSX-T Cloud

- Set up NSX-T Cloud in Avi and import it into VCD. Note that the Avi Controller will indicate the NSX-T Cloud is in red state as there are no NSX-T backed ESXi hosts in the environment yet.

- Set up Service Engine Groups as necessary and import them into VCD

Create Target Provider VDC

1. In vCenter create a sibling resource pool next to the RP backing the source PVDC

2. In VCD create the target NSX-T backed PVDC backed by the RP created in step #1 while using the Geneve backed network pool created previously. Yes, it is possible to create an NSX-T backed PVDC on an NSX-V prepared cluster this way.

Topology Migration

With NSX Migration for VCD perform prechecks for all Org VDCs using the cluster. Skip bridging assessment as it is not necessary.

vcdNSXMigrator.exe --filepath=sampleuserinput.yml --passwordFile=passfile --preCheck -s bridging

If there are no issues, proceed with creation of the target Org VDC network topology, again for all Org VDCs on the cluster. In the input YAML file include the CloneOverlayIds=TRUE line, which ensures that target networks use the same Overlay IDs as the source networks. This allows Migration Coordinator to associate the right networks.

If the topology includes direct VLAN backed Org VDC networks then also use LegacyDirectNetworks=TRUE. This statement will force NSX Migration for VCD to reuse the existing DVS portgroup backed external networks in the target Org VDCs. You also need to make sure that each such parent external network is used by more than one Org VDC network (for example via a dummy temporary Org VDC), otherwise the migration would use the “Imported NSX-T Segment” mechanism, which would not work as there is no ESXi host available yet that could access an NSX-T Segment. See below table from the official doc:

The topology migration is triggered with the following command (must be run for each Org VDC):

vcdNSXMigrator.exe --filepath=sampleuserinput.yml --passwordFile=passfile -e topology
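Since both the precheck and the topology step must be repeated for every Org VDC, it can help to generate the command lines rather than type them. Below is a minimal Python sketch that only builds argument lists. It assumes the options are spelled with ASCII double hyphens and that each Org VDC has its own input YAML file; the file names and the helper function are hypothetical.

```python
from typing import List, Optional

MIGRATOR = "vcdNSXMigrator.exe"


def migrator_args(
    input_file: str,
    password_file: str,
    *,
    precheck: bool = False,
    skip: Optional[str] = None,
    execute: Optional[str] = None,
) -> List[str]:
    """Build the argument list for one run of the migration tool.

    Only options that appear in this article are used here.
    """
    args = [
        MIGRATOR,
        f"--filepath={input_file}",
        f"--passwordFile={password_file}",
    ]
    if precheck:
        args.append("--preCheck")
    if skip:
        args += ["-s", skip]
    if execute:
        args += ["-e", execute]
    return args


# Hypothetical per-Org-VDC input files.
org_vdc_inputs = ["orgvdc-a.yml", "orgvdc-b.yml"]

prechecks = [
    migrator_args(f, "passfile", precheck=True, skip="bridging")
    for f in org_vdc_inputs
]
topology = [
    migrator_args(f, "passfile", execute="topology")
    for f in org_vdc_inputs
]
```

Each list can then be handed to `subprocess.run(args, check=True)` once the prechecks have been reviewed.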

At this stage workloads are still running on NSX-V prepared hosts and are connected to VXLAN/VLAN backed port groups. The target Org VDCs are created and their network topology (Edge GWs and networks) exists only on Edge Transport Nodes as none of the resource ESXi hosts are yet prepared for NSX-T.

Note that you should not use Edge subinterface connection on source Org VDCs networks as it will not work later with Migration Coordinator. Also note that distributed routed networks on source side will experience extended downtime during migration as Migration Coordinator bridging will not work for them. You can convert them to direct interface Edge connectivity or alternatively accept the downtime.

Routed vApps (that use vApp Edge) should be powered off (undeployed). This is because vApp networks are not migrated during topology migration and thus will not exist during the Migration Coordinator step.

Migration Coordinator

In this step we will convert NSX-V hosts to NSX-T. This is done with NSX Migration Coordinator, which will sequentially put each ESXi host into maintenance mode and evacuate its VMs to other hosts (connecting them to VXLAN or Geneve networks). Then it will uninstall NSX-V and install NSX-T VIBs on the host and bridge VXLAN and Geneve overlays directly in VMkernel.

When done, from vCenter perspective workloads will end up on the target networks but from VCD perspective they are still running in the source Org VDCs! The routing and other network services will still be performed by source Edge Gateways. In order to later migrate network services to NSX-T Tier-1 GWs, we must make sure that source Edge Service Gateway VMs stay on NSX-V prepared host so they are still manageable by NSX-V Manager (which uses ESXi host VIX/message bus communication). This is accomplished by creating vCenter DRS affinity groups.

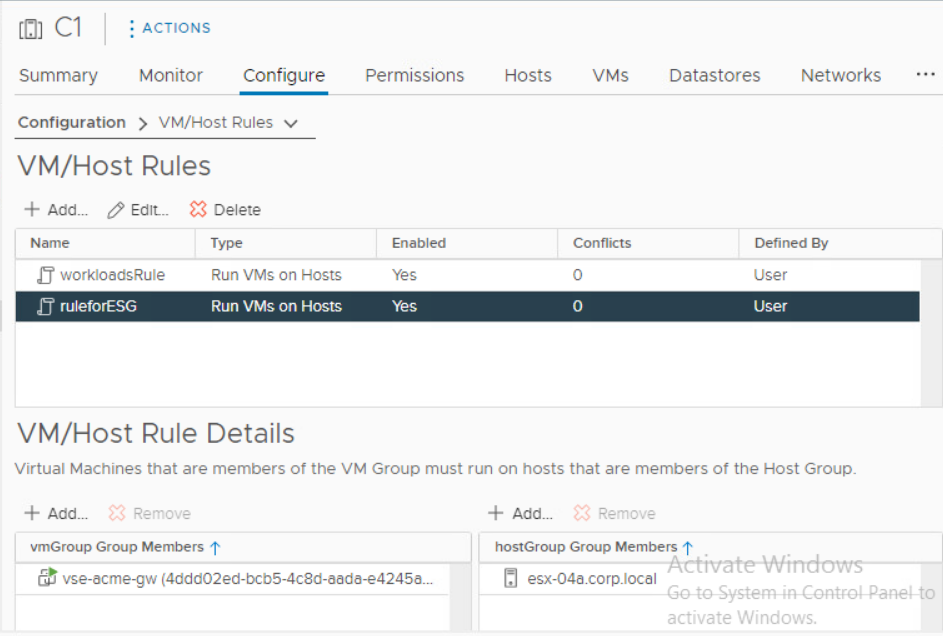

- Create DRS host group named EdgeHost that contains the last host in the cluster

- Create DRS host group named WorkloadHosts that contains all other hosts in the cluster

- Create DRS VM group named EdgeVMs that contains all ESG VMs

- Create DRS VM group named WorkloadVMs that contains all VCD managed workloads

- Create DRS VM/Host must rule for all EdgeVMs to run on EdgeHost

- Create DRS VM/Host must rule for all WorkloadVMs to run on WorkloadHosts

- Make sure that DRS vMotions all VMs as required (if you are using ESGs in HA configuration, disable anti-affinity on them).
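The DRS layout described in the list above can be written down as plain data before it is created in vCenter. The following Python sketch only computes the group membership and the two must-run rules; it does not talk to vCenter. The host and VM names are hypothetical, and the function is an illustration rather than part of any VMware SDK.

```python
from typing import Any, Dict, List


def plan_drs_groups(
    hosts: List[str],
    esg_vms: List[str],
    workload_vms: List[str],
) -> Dict[str, Any]:
    """Pin ESG VMs to the last host and all workloads to the other hosts."""
    if len(hosts) < 2:
        raise ValueError("need one host for ESGs and at least one for workloads")
    return {
        "host_groups": {
            "EdgeHost": [hosts[-1]],
            "WorkloadHosts": hosts[:-1],
        },
        "vm_groups": {
            "EdgeVMs": list(esg_vms),
            "WorkloadVMs": list(workload_vms),
        },
        # (VM group, host group) pairs for the VM/Host "must run on" rules.
        "must_run_rules": [
            ("EdgeVMs", "EdgeHost"),
            ("WorkloadVMs", "WorkloadHosts"),
        ],
    }


# Hypothetical inventory.
plan = plan_drs_groups(
    hosts=["esx-01", "esx-02", "esx-03"],
    esg_vms=["vse-edge-a-0", "vse-edge-b-0"],
    workload_vms=["app-01", "db-01"],
)
```

Keeping the ESG host at the end of the host list matches the later requirement that it is converted last.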

Now we can start the Migration Coordinator conversion:

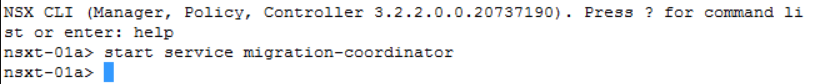

- Enable Migration Coordinator on one of the NSX-T Manager Appliances via CLI command start service migration-coordinator

- Access the NSX-T appliance via web UI and go to System > Lifecycle Management > Migrate and select the Advanced Migration mode DFW, Host and Workload.

- Import the source NSX-V configuration by connecting the vCenter and providing NSX-V credentials:

- Continue to resolving configuration issues. Accept the recommendations provided by the Migration Coordinator.

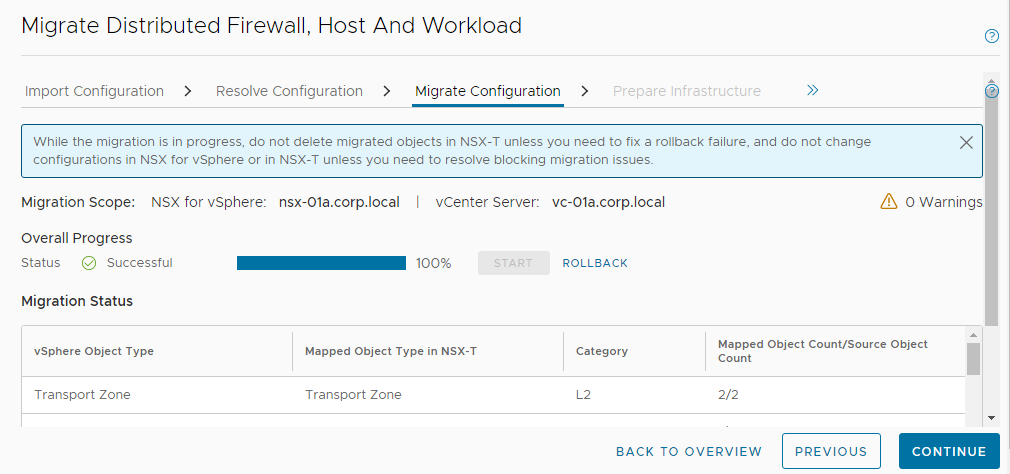

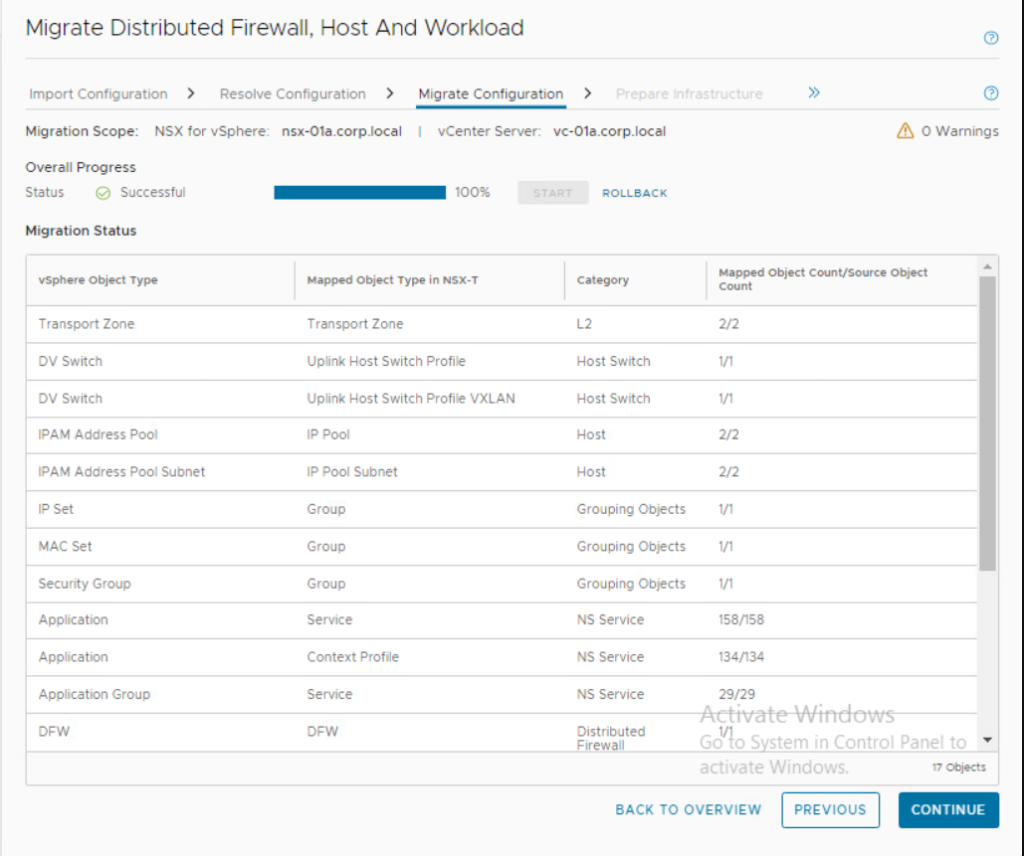

Note: The Migration Coordinator does not recognize the topology created by VCD hence there may be unknown objects like security groups in case DFW is used in VCD. We accept all the recommendations by the Migration Coordinator which is to skip the DFW rules migration. Not to worry, DFW will be migrated by NSX Migration for VCD in a later step.

- Next we migrate configuration:

- Prepare infrastructure in the next step:

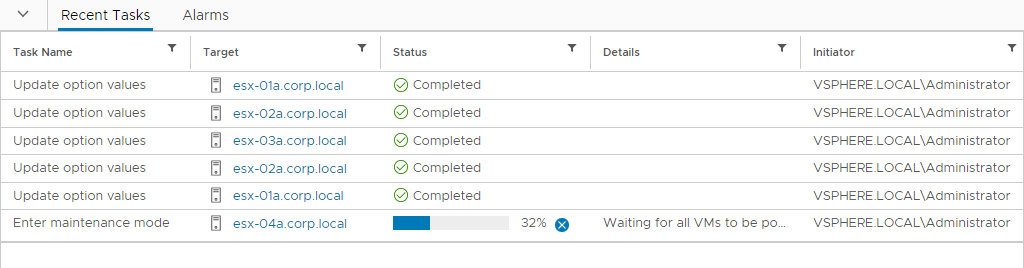

- And finally we arrive at Migrate Host step where the conversion of each ESXi host is performed. Make sure the ESG designated host is last in the sequence. Note that there is no rollback from this step on. So make sure you have tested this process in lab environment and you know what you are doing.

Either use Pause in between host option or let the migration run until the last host conversion. We will pause there (or cancel the VC action that tries unsuccessfully to put the ESG designated host into maintenance mode, as there are DRS pinned VMs on it).

Network Services Migration and VCD vApp Reconciliation

Next, we need to migrate network services from NSX-V ESGs to Tier-1 GWs, migrate DFW and finally from VCD perspective migrate vApps to target Org VDCs.

For each Org VDC perform network services migration:

vcdNSXMigrator.exe --filepath=sampleuserinput.yml --passwordFile=passfile -e services

N/S network disconnection will be performed. NSX-V Edge Gateways will be disconnected from external networks. Source Org VDC networks will be disconnected from their NSX-V Edge Gateways, target Org VDC networks will be connected to NSX-T Tier-1 GWs, and Tier-1 GWs will be connected to the Tier-0 gateway. If LB is used, the Avi Controller will deploy the Service Engines automatically during this phase.

For each Org VDC perform vApp migration step:

vcdNSXMigrator.exe --filepath=sampleuserinput.yml --passwordFile=passfile -e movevapp

Clean-up Phase

- Clean up all source Org VDCs with:

vcdNSXMigrator.exe --filepath=sampleuserinput.yml --passwordFile=passfile --cleanup

- As the previous step deleted all NSX-V ESGs we can go back to NSX Migration Coordinator and convert the remaining host to NSX-T. We can also remove all DRS rules that we created for ESGs and VCD Workloads.

- Disable and remove source Provider VDC.

- In case there are no more NSX-V backed provider VDCs in the environment remove VXLAN network pools and unregister NSX-V from VCD as described here.

- If you migrated your last NSX-V cluster perform NSX-V cleanup from vSphere as described here: https://docs.vmware.com/en/VMware-NSX-T-Data-Center/3.2/migration/GUID-7A07429B-0576-43D0-8808-77FA7189F4AE.html

How to Scale Up NSX Advanced Load Balancer Cloud In VCD?

VMware Cloud Director relies on NSX Advanced Load Balancer (Avi) integration to offer load balancing as a service to tenants – more on that here. This article discusses a particular workaround to scale above the current NSX-ALB NSX-T Cloud limits, which are 128 Tier-1 objects per NSX-T Cloud and 300 Tier-1 objects per Avi Controller cluster. As you can have up to 1000 Tier-1 objects in a single NSX-T instance, could we have load balancing service on each of them?

Yes, but let’s first recap the integration facts.

- VCD integrates with NSX-T via registration of NSX-T Managers

- The next step is creation of VCD Network Pool by mapping it to Geneve Transport Zone provided by the above NSX-T Managers

- Individual Org VDCs consume NSX-T resources for network creation based on assigned VCD Network Pool

- VCD integrates with NSX ALB via registration of Avi Controller Cluster

- NSX ALB integrates with NSX-T via NSX-T Cloud construct that is defined by NSX-T Manager, Overlay Transport Zone and vCenter Server

- NSX-T Cloud is imported into VCD and matched with an existing Network Pool based on the same NSX-T Manager/Overlay Transport Zone combination

- Service Engine Groups are imported from available NSX-T Clouds to be used as templates for resources to run tenant consumed LB services and are assigned to Edge Gateways

Until VCD version 10.4.2 you could map only a single NSX-T Cloud to a Geneve Network Pool. However, with VCD 10.5 and 10.4.2.2 and newer you can map multiple NSX-T Clouds to the same Geneve Network Pool (even coming from different Avi Controller clusters). This essentially allows you to have more than 128 Tier-1 load balancing enabled GWs per such Network Pool, and with multiple NSX ALB instances you could scale all the way to 1000 Tier-1 GWs.

The issue is that VCD currently is not smart enough to pick the most suitable NSX-T Cloud for placement from a capacity perspective. The only logic VCD is using is prioritization based on the alphabetic ordering of NSX-T Clouds in the list. So it is up to the service provider to make sure that the NSX-T Cloud with the most available capacity is on top.

As can be seen above I used A- and B- prefixed names to change the prioritization. Note that the UI does not allow the name edit, API PUT call must be used instead.
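The renaming logic behind this workaround is simple enough to sketch. The Python snippet below assumes you already know how many Tier-1 slots are still free in each NSX-T Cloud and that VCD picks the alphabetically first name, as described above. It only computes the new names with A-, B-, ... prefixes; the cloud names and capacity numbers are hypothetical, and the actual rename would still have to be done with the API PUT call.

```python
import string
from typing import Dict


def prioritized_names(free_capacity: Dict[str, int]) -> Dict[str, str]:
    """Map each unprefixed cloud name to a prefixed name.

    The cloud with the most free capacity receives the alphabetically
    first prefix. Ties are broken by the original name.
    """
    ranked = sorted(free_capacity, key=lambda name: (-free_capacity[name], name))
    if len(ranked) > len(string.ascii_uppercase):
        raise ValueError("this sketch supports at most 26 clouds")
    return {
        name: f"{letter}-{name}"
        for letter, name in zip(string.ascii_uppercase, ranked)
    }


# Hypothetical clouds and remaining Tier-1 capacity.
new_names = prioritized_names({"nsxt-cloud-1": 12, "nsxt-cloud-2": 97})
```

Re-running this after capacity changes gives the provider the list of renames needed to keep the emptiest cloud on top.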

Note: The assignable Service Engine Groups depend on whether the Edge Gateway (Tier-1) has already been assigned to a particular NSX-T Cloud or not. So the API endpoint will reflect that:

https://{{host}}/cloudapi/1.0.0/loadBalancer/serviceEngineGroups?filter=(_context==<gateway URN>;_context==assignable)
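When calling this endpoint from a script, the filter expression usually needs to be URL-encoded. The following Python sketch builds the URL for a given gateway URN. The host name and URN are hypothetical placeholders, and encoding the whole filter value is an assumption about what your HTTP client requires rather than something the API documentation is quoted on here.

```python
from urllib.parse import quote


def assignable_seg_url(host: str, gateway_urn: str) -> str:
    """Build the URL listing Service Engine Groups assignable to a gateway."""
    filter_expr = f"(_context=={gateway_urn};_context==assignable)"
    return (
        f"https://{host}/cloudapi/1.0.0/loadBalancer/serviceEngineGroups"
        f"?filter={quote(filter_expr, safe='')}"
    )


# Hypothetical host and gateway URN.
url = assignable_seg_url("vcd.example.com", "urn:vcloud:gateway:1234")
```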

Lastly, I want to state that the above is considered a workaround and better capacity management will be addressed in future VCD releases.

Legacy API Login Endpoint Removed in VCD 10.5

A brief heads up note that with the release of VMware Cloud Director 10.5 and its corresponding API version 38.0 the legacy API endpoint POST /api/sessions has been removed.

It has been announced for deprecation for some time (actually it was initially planned to be removed in VCD 10.4) and I blogged in detail about the replacement /cloudapi/1.0.0/sessions and /cloudapi/1.0.0/sessions/provider endpoints in the Control System Admin Access blog post.

Any 3rd party tools that use the legacy endpoint and API version 38.0 will get HTTP response 405 – Method not found.

Note that the GET /api/session method will still work until all legacy APIs are moved to /cloudapi.

Also due to backward compatibility API versions 37.2 – 35.2 still provide the legacy endpoint even with VCD 10.5.
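For tools that need to be updated, the replacement login is a POST to one of the /cloudapi session endpoints with HTTP Basic credentials. The Python sketch below only constructs the request and does not send it. It assumes the usual user@org credential format and a JSON Accept header carrying the API version; the host, user and password values are hypothetical.

```python
import base64
import urllib.request


def build_login_request(
    host: str,
    user: str,
    org: str,
    password: str,
    api_version: str = "38.0",
    provider: bool = False,
) -> urllib.request.Request:
    """Prepare (but do not send) a login request for the /cloudapi endpoint."""
    path = "/cloudapi/1.0.0/sessions"
    if provider:
        # Provider (system) administrators use the dedicated endpoint.
        path += "/provider"
    credentials = base64.b64encode(f"{user}@{org}:{password}".encode()).decode()
    return urllib.request.Request(
        f"https://{host}{path}",
        method="POST",
        headers={
            "Accept": f"application/json;version={api_version}",
            "Authorization": f"Basic {credentials}",
        },
    )


# Hypothetical provider login.
request = build_login_request(
    "vcd.example.com", "administrator", "System", "secret", provider=True
)
```

Sending it with `urllib.request.urlopen(request)` should return the session details, with the access token expected in a response header that is then used as a Bearer token on later calls.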

New Networking Features in VMware Cloud Director 10.4.1

The recently released new version of VMware Cloud Director 10.4.1 brings quite a lot of new features. In this article I want to focus on those related to networking.

External Networks on Org VDC Gateway (NSX-T Tier-1 GW)

External networks that are NSX-T Segment backed (VLAN or overlay) can now be connected directly to the Org VDC Edge Gateway rather than being routed through the Tier-0 or VRF the Org VDC GW is connected to. This connection is done via the service interface (aka Centralized Service Port – CSP) on the service node of the Tier-1 GW that is backing the Org VDC Edge GW. The Org VDC Edge GW still needs a parent Tier-0/VRF (now called Provider Gateway) although it can be disconnected from it.

What are some of the use cases for such direct connection of the external network?

- routed connectivity via dedicated VLAN to tenant’s co-location physical servers

- transit connectivity across multiple Org VDC Edge Gateways to route between different Org VDCs

- service networks

- MPLS connectivity to direct connect while internet is accessible via shared provider gateway

The connection is configured by the system administrator. It can use only single subnet from which multiple IPs can be allocated to the Org VDC Edge GW. One is configured directly on the interface while the others are just routed through when used for NAT or other services.

If the external network is backed by a VLAN segment, it can be connected to only one Org VDC Edge GW. This is due to an NSX-T limitation that a particular edge node can use a VLAN segment only for a single logical router instantiated on the edge node. As VCD does not give you the ability to select particular edge nodes for instantiation of Org VDC Edge Tier-1 GWs, it simply will not allow you to connect such an external network to multiple Edge GWs. If you are sharing the Edge Cluster also with Tier-0 GWs, make sure that they do not use the same VLAN for their uplinks too. Typically you would use VLAN for the co-location or MPLS direct connect use case.

Overlay (Geneve) backed external network has no such limitations which makes it a great use case for connectivity across multiple GWs for transits or service networks.

NSX-T Tier-1 GW does not provide any dynamic routing capabilities, so routing to such network can be configured only via static routes. Also note that Tier-1 GW has default route (0.0.0.0/0) always pointing towards its parent Tier-0/VRF GW. Therefore if you want to set default route to the segment backed external network you need to use two more specific routes. For example:

0.0.0.0/1 next hop <MPLS router IP> scope <external network>

128.0.0.0/1 next hop <MPLS router IP> scope <external network>
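The reason this works is longest-prefix matching: the two /1 routes together cover the same address space as 0.0.0.0/0 but are more specific, so they win over the built-in default route. The Python sketch below illustrates this with the standard ipaddress module. The route table and next hop labels are simplified stand-ins, not an NSX-T data structure.

```python
import ipaddress

default = ipaddress.ip_network("0.0.0.0/0")

# Splitting the default route one bit deeper yields exactly the two /1 routes.
halves = list(default.subnets(prefixlen_diff=1))

# Simplified route table: network -> where the traffic is sent.
routes = {
    default: "parent Tier-0/VRF",
    halves[0]: "external network",
    halves[1]: "external network",
}


def best_route(destination: str) -> str:
    """Pick the matching route with the longest prefix."""
    address = ipaddress.ip_address(destination)
    matching = [network for network in routes if address in network]
    return routes[max(matching, key=lambda network: network.prefixlen)]


low_half = best_route("8.8.8.8")
high_half = best_route("203.0.113.10")
```

Every IPv4 destination falls into one of the two halves, so the original default route towards the parent Tier-0/VRF is never selected.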

Slightly related to this feature is the ability to scope gateway firewall rules to a particular interface. This is done via Applied To field:

You can select any CSP interface on the Tier-1 GW (used by the external network or non-distributed Org VDC networks) or nothing, which means the rule will be applied to uplink to Tier-0/VRF as well as to any CSP interface.

IP Spaces

IP Spaces is a big feature that will be delivered across multiple releases, where 10.4.1 is the first one. In short, IP Spaces are a new VCD object that allows managing individual IPs (floating IPs) and subnets (prefixes) independently across multiple networks/gateways. The main goal is to simplify the management of public IPs or prefixes for the provider as well as private subnets for the tenants. Additional benefits are being able to route on a shared Provider Gateway (Tier-0/VRF), use the same dedicated parent Tier-0/VRF for multiple Org VDC Edge GWs, or re-connect Org VDC Edge GWs to a different parent Provider Gateway.

Use cases for IP Spaces:

- Self-service for request of IPs/prefixes

- routed public subnet for Org VDC network on shared Provider Gateway (DMZ use case, where VMs get public IPs with no NATing performed)

- IPv6 routed networks on a shared Provider Gateway

- tenant dedicated Provider Gateway used by multiple tenant Org VDC Edge Gateways

- simplified management of public IP addresses across multiple Provider Gateways (shared or dedicated) all connected to the same network (internet)

In the legacy way the system administrator would create subnets at the external network / provider gateway level and then add static IP pools from those subnets for VCD to use. IPs from those IP pools would be allocated to tenant Org VDC Edge Gateways. The IP Spaces mechanism instead creates standalone IP Spaces, which are collections of IP ranges (e.g. 192.168.111.128-192.168.111.255) and blocks of IP prefixes (e.g. 2 blocks of /28: 192.168.111.0/28 and 192.168.111.16/28, and 1 block of 192.168.111.32/27).
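To make the example IP Space more concrete, the Python sketch below models its IP range and prefix blocks with the standard ipaddress module and checks that they do not overlap. This is only an illustration of the address arithmetic, not of how VCD stores IP Spaces internally, and the non-overlap check is an assumption about what a sensible layout looks like.

```python
import ipaddress
from itertools import combinations

# The floating IP range from the example, expressed as CIDR blocks.
ip_range = list(
    ipaddress.summarize_address_range(
        ipaddress.ip_address("192.168.111.128"),
        ipaddress.ip_address("192.168.111.255"),
    )
)

# The prefix blocks from the example: two /28 blocks and one /27 block.
prefix_blocks = [
    ipaddress.ip_network("192.168.111.0/28"),
    ipaddress.ip_network("192.168.111.16/28"),
    ipaddress.ip_network("192.168.111.32/27"),
]

# Any pair listed here would hand out the same address twice.
overlaps = [
    (a, b)
    for a, b in combinations(ip_range + prefix_blocks, 2)
    if a.overlaps(b)
]

floating_ip_count = sum(network.num_addresses for network in ip_range)
```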

A particular IP Space is then assigned to a Provider Gateway (NSX-T Tier-0 or VRF GW) as IP Space Uplink:

An IP Space can be used by multiple Provider Gateways.

The tenant Org VDC Edge Gateway connected to such IP Space enabled provider gateway can then request floating IPs (from IP ranges)

or assign IP block to routable Org VDC network which results into route advertisement for such network.

If, in the above case, such a network should also be advertised to the internet, the parent Tier-0/VRF needs to have route advertisement manually configured (see below IP-SPACES-ROUTING policy), as VCD will not do so (contrary to the NAT/LB/VPN case).

Note: The IP / IP Block assignments are done from the tenant UI. Tenant needs to have new rights added to see those features in the UI.

The number of IPs or prefixes the tenant can request is managed via quota system at the IP Space level. Note that the system administrator can always exceed the quota when doing the request on behalf of the tenant.

A provider gateway must be designated to be used for IP Spaces, so you cannot combine the legacy and IP Spaces methods of managing IPs on the same provider gateway. There is currently no way of converting a legacy provider gateway to an IP Spaces enabled one, but such functionality is planned for the future.

Tenant can create their own private IP Spaces which are used for their Org VDC Edge GWs (and routed Org VDC networks) implicitly enabled for IP Spaces. This simplifies the creation of new Org VDC networks where a unique prefix is automatically requested from the private IP Space. The uniqueness is important as it allows multiple Edge GWs to share same parent provider gateway.

Transparent Load Balancing

VMware Cloud Director 10.4.1 adds support for Avi (NSX ALB) Transparent Load Balancing. This allows the pool member to see the source IP of the client which might be needed for certain applications.

The actual implementation in NSX-T and Avi is fairly complicated and described in detail here: https://avinetworks.com/docs/latest/preserve-client-ip-nsxt-overlay/. This is due to the Avi service engine data path and the need to preserve the client source IP while routing the pool member return traffic back to the service engine node.

VCD will hide most of the complexity so that to actually enable the service three steps need to be taken:

1. Transparent mode must be enabled at the Org VDC GW (Tier-1) level.

2. The pool members must be created via NSX-T Security Group (IP Set).

3. Preserve client IP must be configured on the virtual service.

Due to the data path implementation there are quite many restrictions when using the feature:

- Avi version must be at least 21.1.4

- the service engine group must be in A/S mode (legacy HA)

- the LB service engine subnet must have at least 8 IPs available (/28 subnet – 2 for engines, 5 floating IPs and 1 GW) – see Floating IP explanation below

- only in-line topology is supported. It means a client that is accessing the LB VIP and not going via T0 > T1 will not be able to access the VS

- only IPv4 is supported

- the pool members cannot be accessed via DNAT on the same port as the VS port

- transparent LB should not be combined with non-transparent LB on the same GW as it will cause health monitor to fail for non-transparent LB

- pool member NSX-T security group should not be reused for other VS on the same port
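The subnet size restriction from the list above can be verified with simple arithmetic: 2 engine IPs, 5 floating IPs and 1 gateway IP add up to 8 addresses, which a /29 (6 usable hosts) cannot provide but a /28 (14 usable hosts) can. The Python sketch below performs this check. The subnet values are hypothetical and the function is not part of VCD or Avi.

```python
import ipaddress

# Address requirements as stated in the restriction list.
REQUIRED = {"service engines": 2, "floating IPs": 5, "gateway": 1}


def usable_hosts(cidr: str) -> int:
    """Number of assignable addresses, excluding network and broadcast."""
    network = ipaddress.ip_network(cidr)
    return max(network.num_addresses - 2, 0)


def fits_transparent_lb(cidr: str) -> bool:
    """True if the subnet has room for engines, floating IPs and the gateway."""
    return usable_hosts(cidr) >= sum(REQUIRED.values())


# Hypothetical LB service network candidates.
slash_28_ok = fits_transparent_lb("192.168.255.0/28")
slash_29_ok = fits_transparent_lb("192.168.255.0/29")
```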

Floating IPs

When transparent load balancing is enabled, the return traffic from the pool member cannot be sent directly to the client (source IP) but must go back to the service engine, otherwise asymmetric routing happens and the traffic flows will be broken. This is implemented in NSX-T via an N/S Service Insertion policy where the pool member (defined via security group) traffic is redirected to the active engine with a floating IP instead of to its default GW. Floating IPs are from the service engine network subnet but are not from the DHCP range which assigns service engine nodes their primary IP. VCD will dedicate 5 IPs from the LB Service network range for floating IPs. Note that multiple transparent VIPs on the same SEG/service network will share a floating IP.