Service providers have been showing growing interest in using VMware Cloud Foundation (VCF) as the underlying virtualization platform in their datacenters. VCF keeps maturing and offers automated lifecycle capabilities that service providers appreciate when operating infrastructure at scale.

I want to focus on how you would design and deploy VMware Cloud Director (VCD) on top of VCF, using a specific example. While there are whitepapers written on this topic, they do not go into the nitty-gritty detail. This should not be considered a prescribed architecture: it is just one way to skin a cat, and it should inspire your own design.

VCF 4.0 consists of a management domain, a smaller infrastructure with one vSphere 7 cluster, NSX-T 3 and vRealize components (vRealize Suite Lifecycle Manager, vRealize Operations Manager, vRealize Log Insight). The management domain also hosts the management components for workload domains, which are separate vSphere 7 + NSX-T 3 environments.

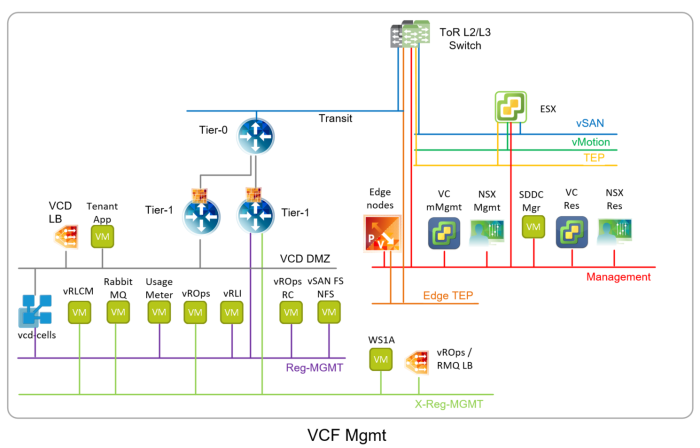

VCF has a prescribed architecture, based on VMware Validated Designs (VVD), for how all the management components are deployed. Some are on VLAN-backed networks, while others are on overlay logical segments created in NSX-T (VVD calls them application virtual networks, or AVN) and routed via NSX-T Edge gateways. The following picture shows the typical logical architecture of the management cluster, which we will start with:

Reg-MGMT and X-Reg-MGMT are overlay segments; the rest are VLAN networks.

VC Mgmt … Management vCenter Server

VC Res … Workload domain (resource) vCenter Server

NSX Mgmt … Management NSX-T Managers (3x)

Res Mgmt … Workload domain (resource) NSX-T Managers (3x)

SDDC Mgr … SDDC Manager

Edge Nodes … NSX-T Edge Node VMs (2x) that provide resources for the Tier-0 and Tier-1 gateways and the load balancer

vRLCM … vRealize Suite Lifecycle Manager

vROps … vRealize Operations Manager (two or more nodes)

vROps RC … vRealize Operations Remote Collectors (optional)

vRLI … vRealize Log Insight (two or more nodes)

WS1A … Workspace ONE Access (formerly vIDM, one or more nodes)

Now we are going to add the VMware Cloud Director solution. I will focus on the following components:

- VCD cells

- RabbitMQ (needed for extensibility such as vROps Tenant App or Container Service Extension)

- vRealize Operations Tenant App (provides multitenant vROps view in VCD and Chargeback functionality)

- Usage Meter

I have followed these design principles:

- VCD solution will utilize overlay (AVN) networks

- leverage existing VCF infrastructure when it makes sense

- consider future scalability

- separate internet traffic from the management one

And here is the proposed design:

A new overlay segment (AVN) called VCD DMZ has been added to separate the internet traffic. It is routed via a separate Tier-1 gateway that is connected to the existing Tier-0. The VCD cells (3 or more) have their primary (eth0) interface on this network, fronted by an NSX-T load balancer (running in its own Tier-1, similar to the vROps one). Finally, the vRealize Operations Tenant App VM is placed on this network as well.

The existing Reg-MGMT segment is used for the secondary interface of the VCD cells, the Usage Meter VM and the vSAN File Services NFS share that the VCD cells require.

And finally, the cross-region X-Reg-MGMT segment is used for the RabbitMQ nodes (2 or more) in order to leverage the existing vROps load balancer and avoid deploying an additional one just for RabbitMQ.
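The placement described above can be captured in a small data structure, which is handy when reviewing the design or generating documentation from it. This is only a minimal sketch in Python: the segment names come from the text, while the dictionary layout and the `segments_of` helper are my own illustration and not part of any VMware tooling.

```python
# Which components sit on which overlay segment (AVN), as described in the text.
# The structure and helper below are illustrative only.
PLACEMENT = {
    "VCD DMZ": [
        "VCD cell (primary interface, eth0)",
        "vRealize Operations Tenant App",
    ],
    "Reg-MGMT": [
        "VCD cell (secondary interface)",
        "Usage Meter",
        "vSAN File Services NFS share",
    ],
    "X-Reg-MGMT": [
        "RabbitMQ node",
    ],
}


def segments_of(component_prefix: str) -> list[str]:
    """Return the segments that host a component whose name starts with the prefix."""
    return [
        segment
        for segment, components in PLACEMENT.items()
        if any(name.startswith(component_prefix) for name in components)
    ]


if __name__ == "__main__":
    for segment, components in PLACEMENT.items():
        print(f"{segment}: {', '.join(components)}")
    # VCD cells are the only dual-homed component in this design.
    print("VCD cell segments:", segments_of("VCD cell"))
```

Only the VCD cells are dual-homed here; every other component lives on exactly one segment.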

Additional notes:

- VCF deploys two NSX-T Edge nodes in a 2-node NSX-T Edge cluster. These currently cannot easily be scaled out. Therefore I would recommend deploying additional Edge nodes in a separate NSX-T Edge cluster (directly in NSX-T) for the DMZ Tier-1 gateway and the VCD load balancer. This guarantees compute and networking resources, especially for the load balancer, which will perform SSL termination (this might not apply if you choose a different load balancer, e.g. Avi). It also makes it possible to deploy a separate Tier-0 for more N/S bandwidth.

- vSAN FS NFS deployment is described here. Do not forget to enable MAC learning on the Reg-MGMT NSX-T logical segment (via segment profile).

- Both Tier-1 gateways can provide north-south firewalling for additional security

- As all the incoming internet traffic to VCD goes through the VCD load balancer, which provides source NAT, I have opted to put the default route of the VCD cells on the management interface. This avoids the need for static routes to separate tenant and management traffic.

Let me know in the comments if you plan to run VCD on VCF and if you are facing any challenges.